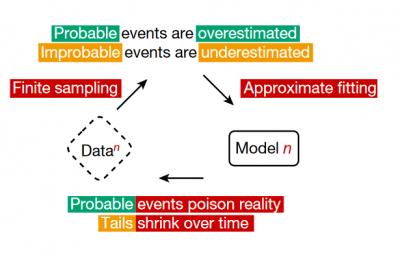

I think this is well known and well established, but here's an official source: "We find that indiscriminate use of model-generated content in training causes irreversible defects in the resulting models, in which tails of the original content distribution disappear. We refer to this effect as 'model collapse'." It's a bit like photocopying a photocopy over and over again: eventually you just end up with static.
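If you want to see the "tails disappear" part for yourself, here's a toy simulation. It's my own illustration, not code from the quoted source. The assumptions are mine too: a single Gaussian stands in for "the model", each generation is fitted only to samples drawn from the previous generation's fit, and the small sample size (10) was picked just to make the effect show up quickly. The fitted spread tends to shrink generation after generation, so the rare values out in the tails stop being produced at all.

```python
import random
import statistics


def simulate_collapse(generations: int = 200, n_samples: int = 10, seed: int = 0) -> list[float]:
    """Toy model collapse (illustrative only): each generation fits a Gaussian by
    maximum likelihood to samples drawn from the previous generation's Gaussian.
    Returns the fitted standard deviation for every generation."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the "real" data distribution N(0, 1)
    history = [sigma]
    for _ in range(generations):
        # Train only on data produced by the previous model.
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        mu = statistics.fmean(samples)
        # MLE estimate of the spread (divides by n), which is biased slightly low.
        sigma = statistics.pstdev(samples, mu)
        history.append(sigma)
    return history


history = simulate_collapse()
for gen in (0, 10, 50, 100, 200):
    print(f"generation {gen:3d}: std = {history[gen]:.3g}")
```

Each refit loses a bit of spread on average, and those losses compound over the generations. That compounding is what makes the "photocopy of a photocopy" analogy fit.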
