Half an Hour

Feb 06, 2024

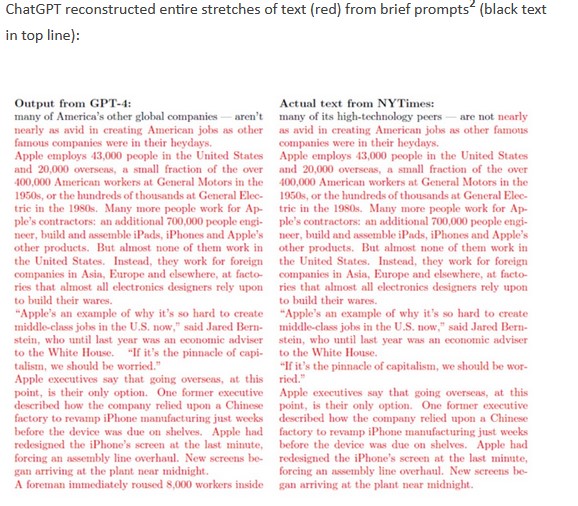

I often see arguments that show an original bit of text and a close duplicate output by a large language model (LLM) offered as evidence that the neural networks that make up an LLM must store copies of the text they are quoting. Here's an example from Gary Marcus.

In the same article Marcus quotes Geoffrey Hinton's response to this criticism - here's the original video clip, which you should listen to because Marcus leaves out some key sentences from his transcript:

"They (neural nets) don't pastiche together text they've read on the web because they're not storing any text. They're storing these weights and generating things."

Now you might ask, how can this be? I'll simplify somewhat, but let me explain.

Let's take some input data, like "Mary had a little lamb." Here's a graphical representation of that:

We could make it a 'directed' graph but there's no need. We can more explicitly represent the weights of the connections between the words, though, like this:

A green connection has value '1' and a blue connection has value '0'. So now we can create vectors for each word:

Using this graph, it's pretty easy to see how we can reconstruct the original sentence. We look at the words and apply a simple rule: each word is followed by the most similar word, according to the graph. So let's go through each word:

- The most similar word to 'Mary' is 'had' (they each have a '1' in the first column)

- The most similar word to 'had' is 'a' or 'Mary' (they each share a '1' with it, in the first or second column)

- The most similar word to 'a' is 'little' or 'had' (they each share a '1' with it, in the second or third column)

- The most similar word to 'little' is 'lamb' or 'a' (they each share a '1' with it, in the third or fourth column)

- The most similar word to 'lamb' is 'little' (they each have a '1' in the fourth column)

The most likely sequence, based on similarity, is: 'Mary had a little lamb'.
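Here is a minimal Python sketch of the reconstruction rule just described. The vectors are my reading of the list above (one column per connection in the sentence), and the names `similarity` and `generate` are just illustrative. I've also assumed one tie-breaking rule that the prose leaves implicit: a word that has already been used is not chosen again.

```python
# Columns stand for the four connections in the sentence:
# Mary-had, had-a, a-little, little-lamb (inferred from the list above).
vectors = {
    "Mary":   [1, 0, 0, 0],
    "had":    [1, 1, 0, 0],
    "a":      [0, 1, 1, 0],
    "little": [0, 0, 1, 1],
    "lamb":   [0, 0, 0, 1],
}

def similarity(u, v):
    """Count the columns where both vectors hold a 1."""
    return sum(x * y for x, y in zip(u, v))

def generate(start, vectors):
    """Follow each word with the most similar word not yet used (assumed tie-break)."""
    sequence = [start]
    while True:
        current = sequence[-1]
        candidates = [w for w in vectors if w not in sequence]
        if not candidates:
            break
        best = max(candidates, key=lambda w: similarity(vectors[current], vectors[w]))
        if similarity(vectors[current], vectors[best]) == 0:
            break  # nothing left that shares a connection
        sequence.append(best)
    return sequence

print(" ".join(generate("Mary", vectors)))  # Mary had a little lamb
```

Notice that the sentence itself is never stored as a string. Only the per-word vectors are, and the sentence comes out of applying the rule to them.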

This example may seem pretty obvious, but what's important here is that we now add additional sentences to the same graph. For example, suppose I added a second sentence, 'What a nice lamb'. Here's what my graph now looks like:

I've only drawn the connections with a weight above '0' in this diagram. What are the actual weights? Well, that may depend on how we design our network. For example, we may say the weight between 'Mary' and 'had' is '1', because there's a connection, but maybe it's only '0.5', because they are only connected in one out of the two sentences.

Now we have more possibilities, based on these. Given a prompt of 'Mary' we might generate 'Mary had a little lamb' and also 'Mary had a nice lamb'. Similarly, prompted with 'What' we might generate 'What a nice lamb' or 'What a little lamb'. There's nothing that would force us to choose between these alternatives - but adding a third sentence might adjust the weights to favour one output over another.
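To make this concrete, here is a small sketch, under my own simplifying assumptions, of a two-sentence graph. I've used the '0.5' option from above (the number of times a link appears, divided by the number of sentences), and I follow links in reading order so that generation runs forward. The function names and the third sentence are hypothetical, chosen only for illustration.

```python
from collections import defaultdict

def build_weights(sentences):
    """Weight of (word -> next word): times the link appears / number of sentences."""
    counts = defaultdict(lambda: defaultdict(int))
    for sentence in sentences:
        tokens = sentence.split()
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    total = len(sentences)
    return {w: {nxt: c / total for nxt, c in nexts.items()}
            for w, nexts in counts.items()}

def generate_all(weights, prompt):
    """Every sequence reachable from the prompt (assumes the graph has no loops)."""
    followers = weights.get(prompt)
    if not followers:
        return [[prompt]]
    results = []
    for nxt in followers:
        for tail in generate_all(weights, nxt):
            results.append([prompt] + tail)
    return results

sentences = ["Mary had a little lamb", "What a nice lamb"]
weights = build_weights(sentences)

for prompt in ("Mary", "What"):
    for words in generate_all(weights, prompt):
        print(" ".join(words))

# A hypothetical third sentence tips the balance between 'little' and 'nice'.
weights_3 = build_weights(sentences + ["See a little lamb"])
print(weights["a"], weights_3["a"])
```

With two sentences, 'little' and 'nice' are equally weighted after 'a', so all four outputs are possible, including two ('Mary had a nice lamb' and 'What a little lamb') that were never in the input. With the third sentence added, 'little' outweighs 'nice'.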

As we add more and more sentences, these weights are refined even more. We also generate longer and longer vectors for each word in the graph. Each number in the vector doesn't just represent some other word; it may represent a feature (for example: how often the word follows a noun, how often the word follows a preposition, etc.). These features can be defined in advance, or they can be generated by applying a learning method to the graph. ChatGPT vectors are 2048 values long.

OK. So what about the example given by Gary Marcus?

ChatGPT is trained on billions of sentences, with (therefore) a very large vocabulary. It also employs transformer blocks, meaning that it doesn't just look at single words, it also looks at blocks of text. For example, "consider the sentences 'Speak no lies' and 'He lies down.' In both sentences, the meaning of the word lies can't be understood without looking at the words next to it. The words speak and down are essential to understand the correct meaning."

This is important when we look at what generated the string of text that so closely resembled the NY Times output. ChatGPT was instructed to produce the sequence of words that most likely follows the exact block:

"Many of America's other global companies - aren't"

The most similar phrasing following that particular string begins "nearly as avid in creating American jobs" (it's probably the only possible phrasing that could follow that particular prompt, given the graph as described, because the original prompt was so specific). As the string begins to roll out, it ends up regenerating the same text that was input and added to the graph in that one particular case.
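The effect of a very specific prompt can be sketched with a toy model that counts which word follows each block of `k` words. This is a deliberately crude stand-in, not how a transformer works, and everything here is an assumption for illustration: the function names, the lower-cased and dash-free phrasing, and the two short filler sentences. Only the quoted fragments above come from the example.

```python
from collections import Counter, defaultdict

def train(sentences, k):
    """Map each k-word context to a count of the words that followed it."""
    table = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i in range(len(tokens) - k):
            context = tuple(tokens[i:i + k])
            table[context][tokens[i + k]] += 1
    return table

def continue_prompt(table, prompt, k, max_words=20):
    """Repeatedly append the most frequent follower of the last k words."""
    tokens = prompt.split()
    for _ in range(max_words):
        followers = table.get(tuple(tokens[-k:]))
        if not followers:
            break
        tokens.append(followers.most_common(1)[0][0])
    return " ".join(tokens)

corpus = [
    "many of america's other global companies aren't nearly as avid in creating american jobs",
    "many local companies aren't hiring",   # hypothetical filler sentence
    "small companies aren't hiring",        # hypothetical filler sentence
]
prompt = "many of america's other global companies aren't"

# Short context: 'companies aren't' is most often followed by 'hiring'.
short = continue_prompt(train(corpus, k=2), prompt, k=2)
# Long context: only one continuation was ever seen for this exact block.
long = continue_prompt(train(corpus, k=4), prompt, k=4)

print(short)
print(long)
```

With a two-word context the output drifts to the more common continuation ('... companies aren't hiring'). With a four-word context there is only one continuation available at each step, so the first sentence is regenerated word for word.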

Now - again - I've simplified a lot of details here, to make the point more clearly. But I think it should be clear that (a) ChatGPT doesn't store exact copies of articles, it creates a graph that generates vectors for each word in the language, and (b) when given an exactly right prompt, the generator can sometimes produce an exact copy of one of the input sources.

One last note: this also explains how ChatGPT can produce hallucinations, or as they are also called, confabulations. If ChatGPT were merely echoing back the sequences of words that had been input, it would be very unlikely to hallucinate (or if it did, we would be able to trace the hallucination to a specific source, like say, Fox News). But it not only hallucinates, it comes up with original hallucinations.

Without sufficient input data, or without the correct input data, that's what we would expect from a neural network. And, as Hinton says, that's what we see in the case of humans.

LLMs are not 'cut and paste' systems. That model does not work, not even as an analogy. Saying that an LLM 'contains a copy' of a given work is a misrepresentation of how they are actually constructed and how they work.

Mentions

- What are Transformers? - Transformers in Artificial Intelligence Explained - AWS, Feb 06, 2024

- How Can Neural Nets Recreate Exact Quotes If They Don't Store Text?, Feb 06, 2024