Half an Hour,

Oct 06, 2023

Matt Crosslin reports having missed one of my posts from last fall in which I comment on something he wrote in his blog, and now that he has seen it, he also reports being "a bit confused by it as well." This post has the objective of making things clearer.

For those who used to follow me on Twitter, I should say, if you're missing my posts, it's because I no longer post on Twitter. Part of the reason for this is that even if you followed me on Twitter, you probably wouldn't be seeing most of what I post (including the one that said I was no longer posting on Twitter). You'd be seeing chaos and disinformation instead. Follow me on Mastodon if you want to see my stuff in real time (or you can use old reliable and open technologies like email or RSS).

November, 2022

OK. To Matt Crosslin's article then.

It's called Is AI Generated Art Really Coming for Your Job? and in general the response he offers is 'no'.

So, is this a cool development that will become a fun tool for many of us to play around with in the future? Sure. Will people use this in their work? Possibly. Will it disrupt artists across the board? Unlikely.

In his follow-up post he wonders why my assessment of his response is 'deny, deny, deny'. It's this. Instead of acknowledging that AI will disrupt artists across the board, his response is to deny that it will happen. My comment is pretty simple, really.

Now, why does he argue that AI will not disrupt artists? It is, he says, because it is not creative.

The big question is: can this technology come up with a unique, truly creative piece of artwork on its own? The answer is still "no."

Now here I would disagree with him as well. But the main point is, he wants to deny that AI can be creative. So, another 'deny' to add to our set. It's still a pretty simple comment.

In his response he vaguely suggests I am misrepresenting his argument (despite the bits I've just quoted). He says,

I'm not sure where the thought that my point was to "deny deny deny" came from, especially since I was just pushing back against some extreme tech-bro hype (some that was claiming that commercial art would be dead in a year – ie November 2023. Guess who was right?).

I will make the point that this is not at all apparent in the original article. He is responding to some Tweets from Ethan Mollick. I'll reproduce the four tweets below because the links are broken in the original post (because Twitter/X is now blocking external API requests, including all those embedded quotes that many people were using). What you should notice is that the statement that "commercial art would be dead in a year" appears neither in the tweets nor in Crosslin's article. So I think I can be forgiven for not having thought he was responding to this specific argument.

What he was pushing back against was the idea that the field of AI-generated art is advancing faster than the field of human-generated art.

There is obviously a lot of improvement in the AI. It actually looks useful now. But saying "a less capable technology is developing faster than a stable dominant technology (human illustration)"…? ... Whoa, now. Time for a reality check. AI art is just now somewhat catching up with where human art has been for hundreds of years.

Yes. Yes it is. Nobody has denied that point ever, I think. The real argument is here:

AI was programmed by people that had thousands of years of artistic development easily available in a textbook. So saying that it is "developing faster"? ... The art field had to blaze trails for thousands of years to get where it is, and the AI versions are just basically cheating to play catch up (and it is still not there yet).

This point is made in various ways not only in Crosslin's two articles but by a large number of pundits across the internet. It is the assertion that AI isn't 'creating', that it is only 'copying', and that the actual creativity comes from humans.

I don't think this is true, and this is the point I tried to illustrate in the second part of my post:

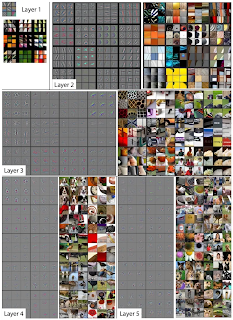

why would anyone suppose AI is limited to being strictly derivative of existing styles? Techniques such as transfer learning allow an AI to combine input from any number of sources, as illustrated, just as a human does.

I picked this example because it was current. Had I been writing the same post today I might have used a slightly different example, one that I documented in OLDaily:

The argument - and it's a good one - is that AI models need to be trained using human data. Otherwise, there is a danger of 'model collapse'. "Model collapse is important because generative AI is poised to bring about significant change in digital content. More and more online communications are being partially or completely generated using AI tools. Generally, this phenomenon has the potential to create data pollution on a large scale. Although creating large quantities of text is more efficient than ever, model collapse states that none of this data will be valuable to train the next generation of AI models." Image of model collapse: IEEE Spectrum.

It might be tempting to say something like "aha! this proves that we need humans to help AI create content." And at the moment, especially if we want accurate (or realistic) content, we do. But the main issue here is that the AI changes the content. When we feed AI-generated content back into itself, we get something that resembles nothing any human ever created.
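The feedback loop can be made concrete with a toy sketch in Python. This is an illustration under strong assumptions, not the method used in the model-collapse research quoted above: 'training' is reduced to resampling with replacement from the previous generation's output, and the names `resample_generations` and `human_data` are hypothetical. It shows only one aspect of the phenomenon, namely that each generation drifts further from the original human data.

```python
import random


def resample_generations(human_data, generations, seed=0):
    """Toy feedback loop: each generation is built only from the previous one.

    Returns the number of distinct items present in each generation,
    starting with the original human data.
    """
    rng = random.Random(seed)
    current = list(human_data)
    distinct_counts = [len(set(current))]
    for _ in range(generations):
        # The new generation never sees the human data again,
        # only what the previous generation produced.
        current = [rng.choice(current) for _ in range(len(current))]
        distinct_counts.append(len(set(current)))
    return distinct_counts


human_data = list(range(100))  # assumption: 100 distinct "human-made" items
counts = resample_generations(human_data, generations=20)
print(counts)
```

Because a generation can only contain items that appeared in the one before it, the count of distinct items can never go up. A real generative model also transforms what it is given, which this sketch deliberately leaves out.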

Either way, my point would be the same: you can't just deny that AI creates something new. There are many ways for AI to create something new - from the image of an otter on an airplane to application of a model to a novel domain to artwork that becomes more and more abstract over time.

And that's what I think was the greatest weakness in the original blog post, one that I didn't carp on in OLDaily: it simply repeated popular misconceptions about AI that are demonstrably false. Like:

- Every single example of AI artwork so far has been very derivative of specific styles of art, usually on purpose because you have to pick an existing artistic style just to get your images in the first place.

- programmers always have to start with the assumption that people actually know what they want out of the AI generator in the first place.

There are also statements about the 'true' nature of creativity that seem pretty suspect. Like these:

- the example above of an "otter making pizza in Ancient Rome" is NOT a "novel, interesting way" by the standards that true artists use.

- the average artist can't produce an original painting on the level of Picasso either

- "Draw me a picture of a man holding a penguin" is a sad measure of artistic creativity

- the time consuming part of creating illustrations is figuring out what the human that wants to art… actually wants

Now in all this there are some good points. Your AI artist, if it is working for a client, will still have to have a conversation with the person who is ordering the art about what they want. A lot of clients will want to just leave it up to the AI, the way today they leave it up to the artist. But even today, you can do this. You don't have to tell the AI which style of art to use; if you don't specify, it will just pick one. Just like a human artist. Or maybe make up a novel style. Just like a Picasso.

And there's the good point that not all art is great art. Some of it is 'a man holding a penguin' or 'dogs playing poker'. But what's important to understand here is that that's what most artists do. Almost nobody is a Picasso. Almost all artists get by doing commercial work. This is what AI is already capable of doing, and yes, it will disrupt the lives and workplaces of these commercial artists.

The wrong response is to deny deny deny that this is happening, as Crosslin does in his post. And that's why I said what I said.

October, 2023

It's probably a good question to ask whether artists' lives have been disrupted after a year of ChatGPT and other generative AI technologies. We've just seen the end of a 148-day strike by the Writers Guild of America over fears about what AI is doing to their workplace. I'd count that as 'disrupted' even without noting that the actors are still on strike as I write.

Let's look at Crosslin's follow-up post entitled Deny Deny Deny That AI Rap and Metal Will Ever Mix. There are some interesting things in this post that I would like to highlight, but there are also some deflections and some of the same misconceptions as in the original post.

Crosslin begins by taking me to task for asking, "why would anyone suppose AI is limited to being strictly derivative of existing styles?" responding "that is what I have read from the engineers creating it" and then immediately qualifying it with "at least, the ones that don't have all of their grant funding or seed money connected to making AGI a reality". I'm not sure exactly what that leaves in the set of 'AI engineers read by Matt Crosslin' but I suspect it's a pretty odd set. We don't get actual quotes from these engineers, so we'll take it for granted that Crosslin read someone, though we don't know who.

In any case, here's the main counter-point he wants to make:

combining any number of sources like humans is not the same as transcending those sources, You can still be derivative when combining sources after all (something I will touch on in a second).

He also criticizes the transfer-learning example by saying:

any creativity that comes from a transfer learning process would be from the choices of humans, not the AI itself.

The latter point is a bit like saying 'any creativity that comes from Picasso comes from the person who provided him the paint'. It confuses the catalyst with the activity.

But the main problem is that AI is represented as simply 'combining sources'. And there are two problems with this argument:

- It's false - AI doesn't simply combine sources. It performs functions on the input data - for example, by identifying previously unseen patterns or categorizing phenomena in new and unanticipated ways

- It's trivial - there is no case in which we get something from nothing. This doesn't happen in human creativity, and it doesn't happen in AI creativity.

Really, what matters here isn't whether the AI 'combines sources' but rather how the AI combines sources. And here I'll credit Crosslin with a nice bit of argumentation as he identifies three distinct types of creativity:

- combinational creativity - where two distinct things are blended, as in the case of 'rap metal'

- explorational creativity - where the model created by one thing is applied to, or blended with, the other (as in transfer learning; Crosslin points to Anthrax's Bring the Noise 91 as an example, but the reference is meaningless to me, as my musical tastes are very different)

- transformational creativity - where "you can at least hear an element of creativity to their music that elevates them above their influences and combined parts" (Crosslin cites Rage Against The Machine, but again, to me it just sounds like derivative noise).

Crosslin writes, "My blog post in question was looking at the possibility that AI has achieved transformational creativity – which is what most people and companies pay creative types to do." I applaud the effort, but the effect is, in the end, to simply deny that AI can be creative in this way.

I have two points:

First, he hasn't told us what 'transformational creativity' is beyond a vague "I know it when I see it" kind of way. It evokes in me the thought that "Any sufficiently advanced technology is indistinguishable from magic." I mean, suppose an AI generated a novel classification of 1600 types of dog barks (from a 24 petabyte dog-bark archive), associated them with musical notes created by 245 distinct Scottish musicians playing the harpsichord, and filtered the resulting sequences for harmonically pleasing variants as measured by feedback from 15,000 Mechanical Turk participants. Would that result in something that is 'transformationally creative'? We don't know - and more significantly, Crosslin has no test except maybe to ask his friends.

This example, by the way, was inspired by what an actual artist, Kate Bush, did to create her album Aerial. Because the second, and more important, part of my argument here is that humans and AIs create in fundamentally similar ways. Humans don't just make stuff up out of the aether; they are endlessly influenced by the sights and sounds around them, by their fellow creators, and by all the creativity that came before.

AI today is limited by the data that it can access, by the amount of processing power it can harness, and by the limitations in what sort of things it can create. ChatGPT, for example, until recently, had nothing but a text library to work with. Stable Diffusion had nothing more than a library of digital images. Imagine trying to be creative having never heard a human read a poem or having never seen an actual forest! But as Ethan Mollick said way back at the start, AI is growing much more rapidly than humans. It will eventually access phenomenal experiences we can only imagine, harness more processing capacity than the largest brain, and sculpt its output on a global scale.

But finally, we come to the last (and possibly unintentional) insight in Crosslin's post: whether something is transformational is in the eye of the beholder.

There are actually different ways of expressing this. We could, for example, appeal to a version of information theory whereby we measure the informational content of a message by counting the reduction of the number of possible worlds conceivable by the receiver. "If a highly likely event occurs, the message carries very little information. On the other hand, if a highly unlikely event occurs, the message is much more informative."
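The quoted idea can be sketched in a few lines of Python. This is a minimal illustration, assuming the standard definition of self-information, I = -log2(p), and assuming that all 'possible worlds' are equally likely; the function names `self_information` and `bits_from_worlds` are hypothetical.

```python
import math


def self_information(p):
    """Information content, in bits, of an event with probability p."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return -math.log2(p)


def bits_from_worlds(before, after):
    """Bits gained when a message narrows `before` equally likely
    possible worlds down to `after` of them (an assumed simplification)."""
    return self_information(after / before)


# A likely event carries little information; an unlikely one carries more.
print(self_information(0.5))    # 1.0 bit
print(self_information(0.125))  # 3.0 bits
print(bits_from_worlds(8, 1))   # 3.0 bits: eight worlds narrowed to one
```

On this measure the same message can carry different amounts of information for different receivers, since each receiver starts with a different set of possible worlds.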

Alternatively, we might frame it in terms of pattern recognition. This is the approach I prefer. On this approach, patterns only exist as such if they are perceived. Show a picture of Richard Nixon to a child from China and it's just some old white guy with sweat on his upper lip. Show it to Bob Woodward and he instantly recognizes it as Richard Nixon. The difference between the two lies in how the pattern is perceived - for Woodward, it triggers an already active pattern of neural connectivity, while for the child, it merely triggers some generic patterns.

If something is already familiar, or if the viewer is (like me) unable to detect the nuances in a new order of music, then it's not transformational. It's just ordinary background noise. However, if it triggers some recognition (for whatever reason) in the listener, and if this feels sufficiently novel (a concept we would need to explore), then it's 'transformative'.

That's why Crosslin goes back again and again to perceptual statements like:

- Fans of the genre routinely mock all AI attempts to create any form of extreme metal for that matter

- But this was the point that many people first noticed it.

- Many people thought it was a pure joke

- chaotic blend of rap and thrash that just blew people's minds back in the day

- maybe I see some transformational creativity there due to bias.

- Sure, you can hear the influences – but you can also hear something else.

- There is an aspect of creativity that is in the eye of the beholder based on what they have beholden before.

There's nothing inherent in an AI that suggests that it cannot create something that is novel to perceivers. It might be a while before we have an AI Picasso who astounds the entire art world. But we waited thousands of years for a Picasso to come along in the world of human artists. Based on existing trends, and seeing what generative AI can already do with such limited resources, it seems reasonable to expect that the wait for an AI Picasso will be a lot shorter.

And yes, that would be very disruptive for artists.

Appendix

Tweets from Ethan Mollick.

If you last checked in on AI image makers a month ago & thought "that is a fun toy, but is far from useful…" Well, in just the last week or so two of the major AI systems updated. You can now generate a solid image in one try. For example, "otter on a plane using wifi" 1st try:

(https://twitter.com/emollick/status/1596579802114592770)

This is a classic case of disruptive technology, in the original Clay Christensen sense A less capable technology is developing faster than a stable dominant technology (human illustration), and starting to be able to handle more use cases. Except it is happening very quickly

Ethan Mollick @emollick

Mar 19, 2021

A thread on an important topic that not enough people know about: the s-curve. It shows us how technologies develop, and it helps explain a lot about how and when certain technologies win, and when startups should enter a market. I'll illustrate with the history of the ebook! 1/n

(https://twitter.com/emollick/status/1596581772644569090)

AI image generation can now beat the Lovelace Test, a Turing Test, but for creativity. It challenges AI to equal humans under constrained creativity. Illustrating "an otter making pizza in Ancient Rome" in a novel, interesting way & as well as an average human is a clear pass!

(https://twitter.com/emollick/status/1596680005638975489 with text links to https://twitter.com/emollick/status/1596680005638975489/photo/1 and https://twitter.com/emollick/status/1596680005638975489/photo/3)

Also worth looking at the details in the admittedly goofy otter pictures: the lighting looks correct (even streaming through the windows), everything is placed correctly, including the drink, the composition is varied, etc.

And this is without any attempts to refine the prompts.

(https://twitter.com/emollick/status/1596621251627335680)

Mentions

- Deny Deny Deny That AI Rap and Metal Will Ever Mix – EduGeek Journal, Oct 06, 2023

- Is AI Generated Art Really Coming for Your Job? – EduGeek Journal, Oct 06, 2023

- 2023 Writers Guild of America strike - Wikipedia, Oct 06, 2023

- Anthrax & Public Enemy - Bring The Noise (Official Video) - YouTube, Oct 06, 2023

- Aerial (album) - Wikipedia, Oct 06, 2023

- Entropy (information theory) - Wikipedia, Oct 06, 2023

- A Less Capable Technology is Developing Faster than a Stable Dominant Technology, Oct 06, 2023