The Q, K, V Matrices

Arpit Bhayani,

Dec 04, 2025

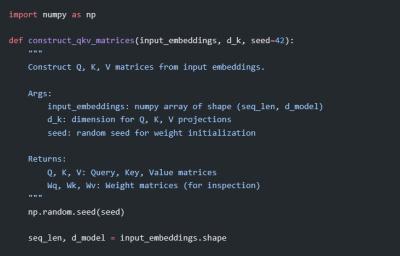

This is a useful reconstruction of the transformer architecture, introduced in 2017, which described 'attention' and kicked off what would become the AI revolution starting in 2022. As Arpit Bhayani writes, "at the core of the attention mechanism in LLMs are three matrices: Query, Key, and Value. These matrices are how transformers actually pay attention to different parts of the input." The mechanism tells the model which words in a sentence matter most for each position, and the three matrices are what let it use that information to predict more accurately what should come next. This is why AI isn't going away; look how simple and straightforward this is.
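To make the idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is not taken from Bhayani's article: the three-token input `x` and the identity projection matrices `w_q`, `w_k`, `w_v` are made-up toy values (a real model learns these weights), and the sketch leaves out multiple heads and the causal mask a language model would use.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Turn a list of scores into positive weights that sum to 1."""
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention over token vectors x."""
    q = matmul(x, w_q)  # Query: what each token is looking for
    k = matmul(x, w_k)  # Key: what each token offers to be matched on
    v = matmul(x, w_v)  # Value: the content each token contributes
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # similarity of every query to every key
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return weights, matmul(weights, v)  # each output is a weighted mix of values


# Toy input (assumed for illustration): three tokens, two dimensions each.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # placeholder for learned projections
w_q, w_k, w_v = identity, identity, identity

weights, output = attention(x, w_q, w_k, w_v)
for token_index, row in enumerate(weights):
    print(token_index, [round(w, 3) for w in row])
```

Each row of `weights` shows how much one token attends to every token in the input, and each row of `output` is the corresponding blend of Value vectors.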
