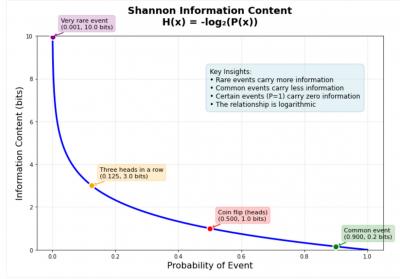

This is a great little article that summarizes the core basis of information theory: "In 1948, Claude Shannon published a paper that changed how we think about information forever... Shannon showed that information could be quantified mathematically by looking at uncertainty and surprise. The information (in bits) of an event is equal to the negative log of the probability of the event." "When an event has probability 1.0 (certainty), it gives you zero information. When an event is extremely rare, it provides high information content." What that means depends on how you interpret probability, but that's a subject for another day.
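To make the quoted definition concrete, here is a minimal sketch in Python. It assumes the logarithm is taken base 2, which is what measuring in bits implies; the function name `self_information_bits` is my own and does not come from the article.

```python
import math


def self_information_bits(p: float) -> float:
    """Information content, in bits, of an event with probability p.

    Assumes a base-2 logarithm (hence bits). The function name is
    illustrative only.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in the interval (0, 1]")
    if p == 1.0:
        # A certain event carries zero information.
        return 0.0
    # Negative log of the probability: rarer events give larger values.
    return -math.log2(p)


if __name__ == "__main__":
    # A certain event, a fair coin flip, and a 1-in-1024 event.
    for p in (1.0, 0.5, 1 / 1024):
        print(f"p = {p}: {self_information_bits(p)} bits")
```

A fair coin flip (probability 0.5) comes out to exactly one bit, and each halving of the probability adds one more bit, so a 1-in-1024 event carries ten bits.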
