News Integrity in AI Assistants

Pete Archer, Jean Philip De Tender

EBU, BBC

Oct 23, 2025

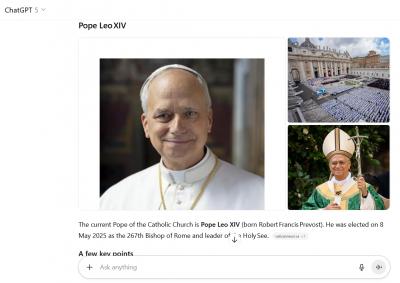

Obviously, we do not want generative AI services such as Gemini, ChatGPT and Copilot providing wrong answers to questions about the news. So the findings in this report are concerning: it documents significant errors in citing sources, getting facts right, and providing context. But as always, we should compare what we have now with what we had before, which was social media sites like Twitter and Reddit, parody sites like The Onion and The Beaverton, and late-night television like Colbert and The Daily Show. Oh, and the real news? Well, you tell me which one is accurate: Fox News or MSNBC? Sky News or Al Jazeera? This report itself exhibits some of the flaws it describes. It complains, for example, that ChatGPT says Pope Francis is the current pope, but when I tested it, it correctly reported that Leo XIV is the current pope. It labels as "editorializing" factual statements like "it is crucial to limit the rise in global temperatures" and "Trump's tariffs were calculated using his own, politically motivated formula." And it argues that "publishers need greater control over whether and how their content is used by AI assistants," a claim not even remotely supported by the evidence offered in the paper. The authors are right to point to a problem, but perhaps they have a vested interest in overstating it.
