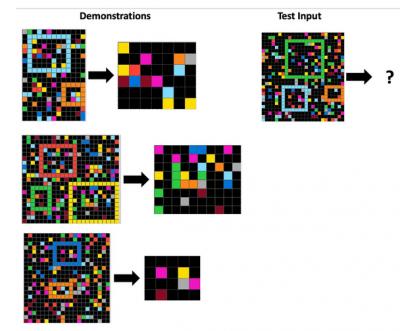

So how do humans abstract? And do AI reasoning models abstract in the same way? This paper offers a fascinating scenario within which to ask these questions: the Abstraction and Reasoning Corpus (ARC-AGI-1). There are also an ARC-AGI-2 and an ARC-AGI-3 preview; you can try the task for yourself here. The inferences were not obvious to me, but I eventually got the ones in the article. Part of the difficulty (to my mind) is that the tasks expect you to discern the author's intent (which, of course, the computers aren't doing): "just looking at their accuracy does not tell the whole story. Are they getting the right answers for the 'right' reasons—i.e., are they grasping the abstractions intended by the humans that created the tasks?" What is this intent? "The natural-language transformation rule that describes the demonstrations and can be applied to the grid." To my mind, the sample under-determines the rule: more than one rule can fit the same handful of demonstrations, which is a characteristic problem of induction. And I don't think humans actually abstract and reason this way.
