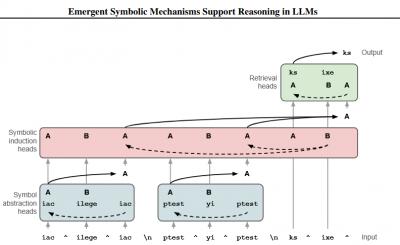

A longstanding critique of neural network methods (aka 'artificial intelligence') is that they cannot reason abstractly (a problem known historically, in Chomsky, as "Plato's Problem"). This critique persists to the present day, with the claim that even 'reasoning' large language models (LLMs) aren't reasoning abstractly. This paper (35 page PDF) shows that the mechanisms can be developed using neural networks alone; they can learn abstract reasoning by themselves. There's even a remark at the end of the paper suggesting how neural networks might deal with "'content effects', in which reasoning performance is not entirely abstract," as oft-noted in cognitive psychology. I won't pretend to have understood the details of the paper, much less the long exchange between the authors and reviewers in the open review process (that would take years, I suspect), but the paper is clear enough that its importance is recognizable. Via Scott Leslie, who mutters, "But yeah, sure, 'stochastic parrots'..."
