IAB Workshop on AI-CONTROL (aicontrolws)

IETF, Aug 29, 2024

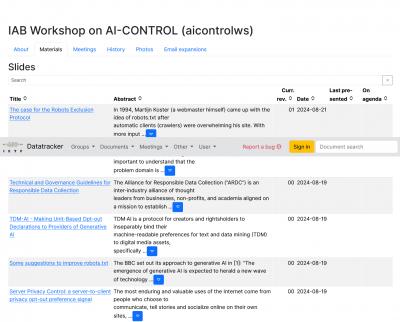

Robots.txt (a.k.a. the Robots Exclusion Protocol) is a small file that web servers provide to tell web crawlers, like the one behind the Google search engine, which parts of a site they may crawl and which they may not. The Internet Engineering Task Force (IETF), which creates the protocols for the internet, is considering the use of robots.txt to manage what crawlers used by AI companies can do. This page is a set of submissions to that task force, including contributions from OpenAI, Creative Commons, the BBC, Elsevier, and more. Most of the submissions are pretty short and all of them are interesting reading. Via Ed Summers.
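For readers who haven't looked inside a robots.txt file, here is a minimal sketch of how a well-behaved crawler might read one, using Python's standard `urllib.robotparser` module. The file contents, the crawler names `ExampleAIBot` and `ExampleSearchBot`, and the `example.com` URLs are all made up for illustration; none of them come from the workshop submissions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one (made-up) AI crawler everywhere,
# and keep every other crawler out of /private/ only.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(text):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A compliant crawler asks before fetching each URL.
ai_allowed = parser.can_fetch("ExampleAIBot", "https://example.com/articles/1")
search_allowed = parser.can_fetch("ExampleSearchBot", "https://example.com/articles/1")
private_allowed = parser.can_fetch("ExampleSearchBot", "https://example.com/private/notes")

print(ai_allowed, search_allowed, private_allowed)
```

Note that nothing here is enforced by the server: the file only works if the crawler chooses to check it, which is part of why its use for AI crawlers is up for discussion.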
