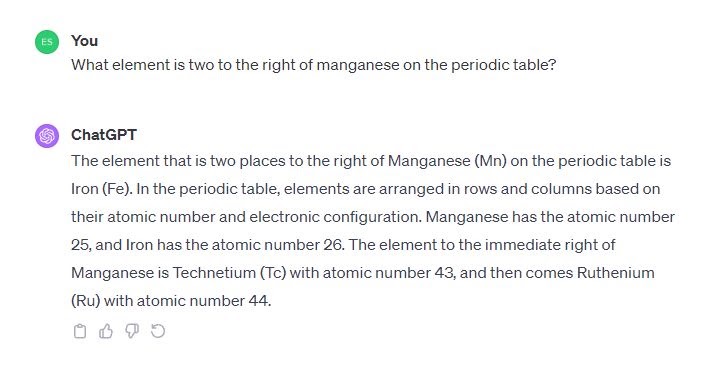

As always, I apply the questions asked about large language models (LLMs) to human subjects, such as students. For example: how do we know students (or people in general) have beliefs? This is a quick look at the question. Most telling is how we know artificial intelligences don't have beliefs: "They hallucinate too frequently, they change their answers, they don't consistently enough 'remember' what they earlier committed to, their logical reasoning can be laughably bad... It doesn't have a stable 'opinion'." A 'belief' is, if nothing else, a response we can predict from someone, something recognizable, and something (maybe) grounded.
