Mar 09, 2009

Originally posted on Half an Hour, March 9, 2009.

The Network Phenomenon: Empiricism and the New Connectionism

Stephen Downes, 1990

V. Distributed Representation

A. A First Glance at Distributed Representation

Above, when discussing the "Jets and Sharks" example, I mentioned that the hidden units represent individual people. This representation occurs not in virtue of any property or characteristic of the unit in question, but rather, it occurs in virtue of the connections between the unit in question and other units throughout the network. Another way of saying this is to say that the representation of a given individual is "distributed" across a number of units.

The concept of distributed representation is, first, a completely novel concept, and second, central to the replies to many of the objections which may be raised against empiricism and associationism. For that reason, it is best described clearly and it is best developed in contrast with traditional theories of representation.

B. Representation and the Imagery Debate

Traditional systems of representation are linguistic. What I mean by that is that individuals and properties of individuals are represented by symbols. More complex representations are obtained by combining these symbols. The content of the larger representations is determined exclusively by the contents of the individual symbols and the manner in which they are put together. For example, one such representation may be "Fa&Ga". The meaning of the sentence (that is [a bit controversially], the content) is determined by the meanings of "Fa", "Ga", and the logical connective "&".

There are two defining features to such a theory of representation. [18] First, they are governed by a combinatorial semantics. That means there are no global properties of a representation which are over and above an aggregate of the properties of its atomic components. Second, they are structure sensitive. By that, what I mean is that sentences in the representation may be manipulated strictly according to their form, and without regard to their content.
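The two features can be made concrete with a toy model. What follows is a minimal sketch, not a claim about how any particular theory is implemented: the tuple encoding of formulas and the names `evaluate` and `simplify_left` are assumptions introduced here for illustration. `evaluate` computes the content (here, simply a truth value) of "Fa&Ga" from nothing but the contents of "Fa" and "Ga" and the connective, while `simplify_left` operates on the form of a conjunction alone.

```python
from typing import Dict, Tuple, Union

# Hypothetical encoding: an atom is a string, a conjunction is ("&", left, right).
Formula = Union[str, Tuple[str, "Formula", "Formula"]]

def evaluate(formula: Formula, atoms: Dict[str, bool]) -> bool:
    """Combinatorial semantics: the whole is fixed by the parts and their arrangement."""
    if isinstance(formula, str):            # atomic sentence such as "Fa"
        return atoms[formula]
    connective, left, right = formula
    if connective == "&":
        return evaluate(left, atoms) and evaluate(right, atoms)
    raise ValueError(f"unknown connective: {connective}")

def simplify_left(formula: Formula) -> Formula:
    """Structure sensitivity: from (X & Y) derive X, whatever X and Y happen to say."""
    if isinstance(formula, tuple) and formula[0] == "&":
        return formula[1]
    raise ValueError("rule applies only to conjunctions")

fa_and_ga = ("&", "Fa", "Ga")
value = evaluate(fa_and_ga, {"Fa": True, "Ga": False})
derived = simplify_left(fa_and_ga)
```

The same two functions work unchanged if "Fa" and "Ga" are swapped for any other atoms, which is the sense in which one small set of rules applies to many distinct representations.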

There are several advantages to traditional representations. First, they are exact. We can state, in clear and precise notation, exactly the content of a given representation. Second, the system is flexible. The symbols "F", "G" and "a" are abstracts and can stand for any properties or individuals. Thus, one small set of rules is applicable to a large number of distinct representations. That explains how we reason with and form new representations.

We can illustrate this latter advantage with an example. Human beings learn a finite number of words. However, since the rules are applicable to any set of words, they can be used to construct an infinite number of sentences. And it appears that human beings have the capacity to construct an infinite number of sentences. Now consider a system of representation which depends strictly on content. Then we would need one rule for each sentence. In order to construct an infinite number of sentences, we would need an infinite number of rules. Since it is implausible that we have an infinite number of rules at our disposal, we need rules which contain abstract terms and which apply to any number of instances. [19]

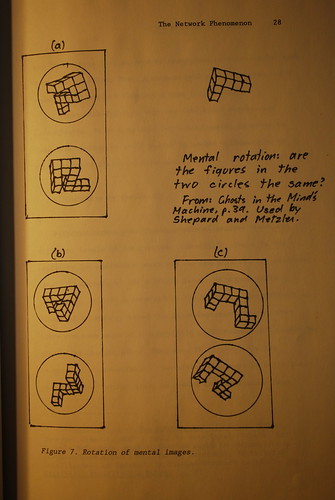

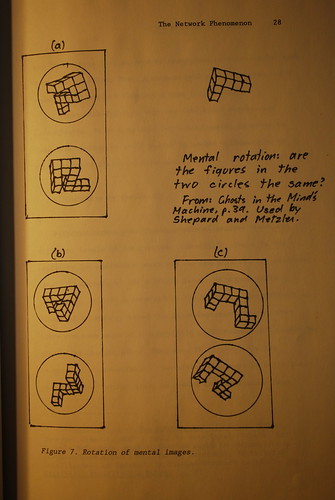

The linguistic theory of representation is often contrasted with what is called the "picture theory" of representation. Picture theories have in common the essential idea that our representations can in some cases consist of mental images or mental pictures. It appears that we manipulate these pictures according to the rules which govern our actual perceptions of similar events. For example, Shepard's experiments involving the rotation of a mental image suggest that there is a correlation between the time taken to complete such a task and the angle of rotation - just as though we had the object in our head and had to rotate it physically. [20] See figure 7.

Shepard's experiments are inconclusive, however. Pylyshyn [21] cautions that there is no reason to believe that the laws which govern physical objects are the same as those which govern representations of physical objects (he calls the tendency to suppose that they are the "objective pull"). It could be the case, he argues, that Shepard's time-trial results are the result of a repeated series of mathematical calculations. It may be the case that we need to repeat the calculation for each degree of rotation. Thus, a mathematical representation could equally well explain Shepard's time-trial results.
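Pylyshyn's alternative can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `rotate_stepwise`, the use of a single 2-D point to stand in for an object, and the one-degree step size are all choices made here, not details from the experiments. The point is that a purely numerical procedure, repeated once per degree, takes a number of steps proportional to the angle without any picture being rotated.

```python
import math

def rotate_stepwise(point, degrees):
    """Rotate a 2-D point by repeating a one-degree calculation `degrees` times."""
    x, y = point
    step = math.radians(1.0)
    cos_s, sin_s = math.cos(step), math.sin(step)
    steps = 0
    for _ in range(degrees):
        # One application of the standard 2-D rotation formula.
        x, y = x * cos_s - y * sin_s, x * sin_s + y * cos_s
        steps += 1
    return (x, y), steps

rotated_60, steps_60 = rotate_stepwise((1.0, 0.0), 60)
rotated_120, steps_120 = rotate_stepwise((1.0, 0.0), 120)
```

Doubling the angle doubles the number of calculations, so a linear relation between time and angle is what such a procedure would predict.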

I agree with Pylyshyn that the time-trial results are inconclusive. So we must look for other reasons in order to determine whether we would prefer to employ a linguistic or non-linguistic theory of representation.

C. Cognitive Penetrability

Pylyshyn argues that in computational systems there are different levels of description. Essentially, there is the cognitive (or software) level and the physical (or hardware) level. [22] Aspects of the software level, he argues quite reasonably, will be determined by the hardware. The evidence in favour of a language-based theory of representation is that there are some aspects of linguistic performance which cannot be changed by thought alone. Therefore, they must be built into the hardware. Further study is needed to distinguish these essential hardware constraints from accidental hardware constraints, but this need not concern us. [23] The core of the theory is that there are cognitively impenetrable features of representation, and that these features are linguistic.

These features are called the "functional architecture". When I assert that these features are linguistic, I do not mean that they are encoded in a language. Rather, what I mean is that the architecture is designed to be a formal, or principled architecture. It is structure-sensitive and abstract. Perhaps the most widely known functional architecture is Chomsky's "universal grammar" which contains "certain basic properties of the mental representations and rule systems that generate and relate them." [24] Similarly, Fodor proposes a "Language of Thought" which has as its functional architecture a "primitive basis", which includes an innate vocabulary, and from which all representations may be constructed. [25]

The best argument in favour of the functional architecture theory (in my opinion) is the following. In order to have higher-level cognitive functions, it is necessary to have the capacity to describe various phenomena at suitable degrees of abstraction. With respect to cognitive phenomena themselves, these degrees of abstraction are captured by 'folk psychological' terminology - the language of "beliefs", "intentions", "knowledge" and the like (as opposed to descriptions of neural states or some other low level description). The adequacy of folk psychology is easily demonstrated [26] and it is likely that humans employ similarly abstract descriptions in order to use language, do mathematics, etc.

It is important at this time to distinguish between describing a process in abstract terms and regulating a process by abstract rules. There is no doubt that abstractions are useful in description. But, as argued above, connectionist systems which are not governed by abstract rules can nonetheless generalize, and hence, describe in abstract terms. What Chomsky, Fodor and Pylyshyn are arguing is that these abstract processes govern human cognition. This may be a mistake. The tendency to say that rules which may be used to describe a process are also those which govern it may be what Johnson-Laird calls the "Symbolic Fallacy".

What is needed, if it is to be argued that abstract rules govern mental representations, is inescapable proof that they do. Pylyshyn opts for a cognitive approach; he attempts to determine those aspects of cognition which cannot be changed by thought alone. The other approach which could be pursued is the biological approach: study brains and see what makes them work. Although I do not believe that psychology begins and ends with the slicing of human brains, I nonetheless favour the biological approach in this instance. For it is arguable that nothing is cognitively impenetrable.

Let me turn directly to the attack, then. If the physical construction of the brain can be affected cognitively, then even if rules are hard-wired, they can be cognitively penetrable. If we allow that "cognitive phenomena" can include experience, then there is substantial experimental support which shows that the construction of the brain can be affected cognitively. There is no reason not to call a perceptual experience a cognitive phenomenon. For otherwise, Pylyshyn's argument begs the question, since it is easy, and evidently circular, to oppose empiricism if experience is not one of the cognitive phenomena permitted to exist in the brain.

A series of experiments has shown that the physical constitution of the brain is changed by experience and especially by experience in early age. Hubel and Wiesel showed that a series of rather gruesome stitchings and injuries to cats' eyes changes the pattern of neural connectivity in the visual cortex. [27] Similar phenomena have been observed in humans born with eye disorders. Even after a disorder, such as crossed eyes, has been surgically repaired, the impairment in visual processing continues. Therefore, it is arguable that experience can change the physical constitution of the brain. There is no a priori reason to argue that experience might not also change some high-level processing capacities as well.

Let me suggest further that there is no linguistic aspect of cognition which cannot be changed by thoughts and beliefs. For example, one paradigmatic aspect of linguistic behaviour is that the rules governing the manipulation of a symbol ought to be truth-preserving. Thus, no rule can produce an internally self-contradictory representation. However, my understanding of religious belief leads me to believe that many religious beliefs are inherently self-contradictory. For example, some people really believe that God is all-powerful and yet cannot do some things. If it is possible even to entertain such beliefs, then it is possible at least to entertain the thought that the principle of non-contradiction could be suspended. Therefore, the principle does not act as an all-encompassing constraint on cognition. It is therefore not built into the human brain; it is learned.

Wittgenstein recognized that many supposedly unchangeable aspects of cognition are not, in fact, unchangeable. In On Certainty, Wittgenstein examines those facts and rules which constitute the "foundation" or "framework" of cognition. He concludes, first, that there are always exceptions to be found to those rules, and second, that these rules change over time. He employs the analogy of a "riverbed" to describe how these rules, while foundational, may shift over time.

If the rules which govern representations are learned, and not innate, then it does not follow that it is necessary that these rules ought to follow any given a priori structure. And if this does not follow, then it further does not follow that rules ought to follow a priori rules of linguistic behaviour. Since the rules which govern representations are learned, then, it follows that there is no a priori reason to suppose that human representations are linguistic in form. They could be, but there is no reason to suppose that they must be. The question, then, of what sort of rules representations will follow will not be determined by an analysis of representations in search of governing a priori principles. We must look at actual representations to see what they are made of and how they work. In a word, we must look at the brain.

D. Two Short Objections to Fodor

Before looking at brains, I would like in this section to propose two short objections to Fodor's thesis that language use is governed by a set of rules which is combinatorial and structure-sensitive.

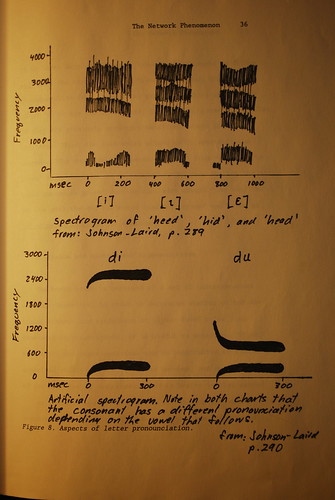

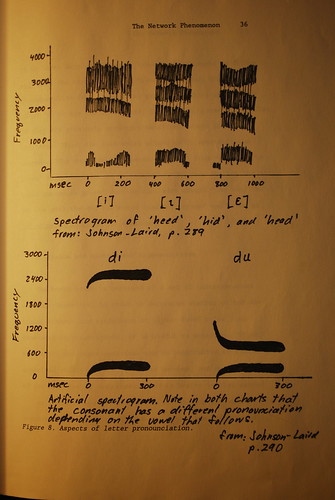

The first objection is that it would be odd if the meaning of sentences was determined strictly by the meanings of their atomic components. The reason why it would be odd is that we do not determine the meaning of words according to the meanings of their atomic components, namely, letters. It is in fact arguable that even the pronunciation of words is not determined strictly according to the pronunciation of individual letters (here I am reminded of "ghoti", the alternative spelling for "fish" often attributed to George Bernard Shaw). Further, just as the pronunciation of individual letters is affected by the word as a whole (the letter "b" is pronounced differently in "but" and "bought" - the shape of the mouth differs in each case), so may also the meaning of a word be affected by the sentence as a whole and the context in which it is uttered. [28] See figure 8.

The second objection is that a strict application of grammatical rules results in absurd sentences. Therefore, something other than grammatical rules governs the construction of sentences. Rice [29] points out that the transformation from "John loves Mary" to "Mary is loved by John" ought to follow only from the transitivity of "loves". However, the transformation from "John loves pizza" to "Pizza is loved by John" is, to say the least, odd. In addition, grammatical rules, if they are genuinely structure-sensitive, ought to be recursive. Thus, the rule which allows us to construct the sentence "The door the boy opened is green" from "The door is green" ought to allow us to construct "The door the boy the girl hated opened is green" and the even more absurd "The door the boy the girl the bot bit hated opened is green" and so on ad infinitum ad absurdum (or whatever).
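The recursion at issue can be shown with a short sketch. The grammar fragment below is an assumption made for illustration (the names `noun_phrase` and `sentence` are hypothetical, and no actual linguistic formalism is being reproduced): a noun may be followed by another noun phrase and a verb, and the rule may call itself without limit.

```python
def noun_phrase(noun, clauses):
    """Rule: NP -> noun | noun NP verb, where the inner NP is built the same way."""
    if not clauses:
        return noun
    (subject, verb), rest = clauses[0], clauses[1:]
    return f"{noun} {noun_phrase(subject, rest)} {verb}"

def sentence(clauses):
    """Wrap the (possibly embedded) noun phrase in the frame 'The door ... is green'."""
    return f"{noun_phrase('The door', clauses)} is green"

plain = sentence([])
once = sentence([("the boy", "opened")])
twice = sentence([("the boy", "opened"), ("the girl", "hated")])
```

Nothing in the rule itself stops at two embeddings; each further application is equally well-formed by the rule and progressively less acceptable as a sentence.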

E. Brains and Distributed Representations

The human brain is composed of a set of interconnected neurons. Therefore, in order to determine whether or not the brain, without the assistance of higher-level rules, can construct representations, it is useful to determine, first, whether or not representations can be constructed in systems comprised solely of interconnected neurons, and second, whether or not they are in fact constructed in brains in that manner.

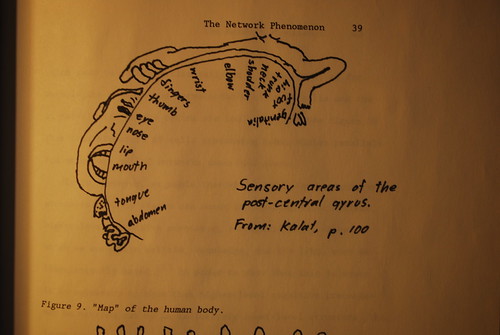

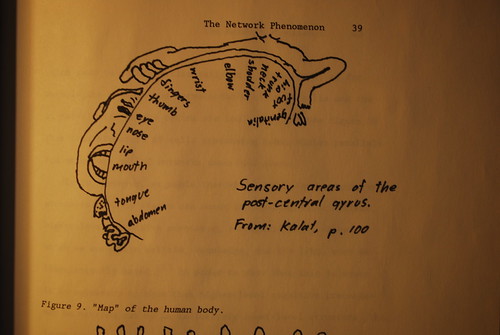

At least at some levels, there is substantial evidence that representations both can be and are stored non-linguistically. Sensory processing systems, routed first through the thalamus and then into the cerebral cortex, produce not sentences and words, but rather, representational fields (much like Quine's 'quality spaces' [30]) which correspond to the structure of the input sensory modality. For example, the neurons in level IV of the visual cortex are arranged in a single sheet. The relative positions of the neurons on this sheet correspond to the relative positions of the input neurons in the retina. Variations in the number of cells producing output from the retina produce corresponding variations in the arrangement of the cells in V-IV. Similarly, the neurons in the cortex connected to input cells in the ear are arranged according to frequency. In fact, the cells of most of the cerebral cortex may be conceived to be arranged into a "map" of the human body. [31] See figure 9.

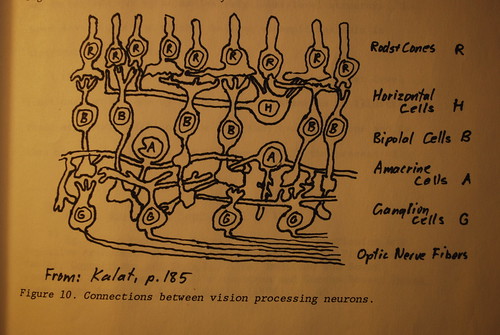

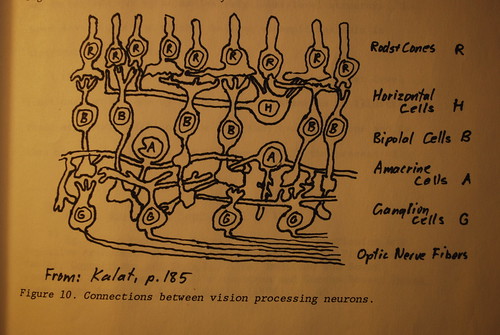

The connections between neurons in the human visual system are just the same as those in the connectionist networks described above. There are essentially two types of connections: excitatory connections, which tend to connect one layer of cells with the next, as for example the connections between retinal cells and ganglia are excitatory; and inhibitory connections, which tend to extend horizontally at a given level, as for example the connections between the horizontal cells and the bi-polar cells in the retina are inhibitory. [32] See figure 10. Thus, the structure of cells processing human vision parallels the structure of IAC networks described above.

It is, however, arguable that while lower-level cognitive processes such as vision can occur in non-linguistic systems, higher-level cognitive processes, and specifically those in which we entertain beliefs, knowledge, and the like, must be linguistically based. [33] In order to show that this is wrong, it is necessary to show that higher-level cognitive processes may be carried out by an exclusively lower-level structure. The lower level structure which I believe accomplishes this is distributed representation.

I think that the best way to show that these higher-level functions can be done by connectionist systems is to illustrate those arguments which conclude that they cannot be done and to show how those arguments are misconstrued. In the process of responding to these objections, I will describe in more detail the idea of distributed representation.

F. Distributed Representations: Objections and Responses

There are two types of objections to distributed representations.

The first of these is outlined by Katz, though Fodor provides a version of it. The idea is that if representations depend only on connected sets of units or neurons, then it is not possible to sort out two distinct representations or two distinct kinds of representations. Katz writes, "Given that two ideas are associated, each with a certain strength of association, we cannot decide whether one has the same meaning as the other, whether they are different in meaning... etc." For example, "ham" and "eggs" are strongly associated, yet we cannot tell whether this is a similarity in meaning or not. [34]

Fodor's argument is similar. Suppose each unit stands for a sentence fragment, for example, "John -" is connected to " - is going to the store." Suppose further that a person at the same time entertains a connection between "Mary -" and " - is going to school". In such a situation, we are unable to determine which connections, such as that between "John -" and " - is going to the store", represent a sentence and which, for example that between "John -" and "Mary -", represent some other association, for example, between brother and sister. [35]

In order to respond to this objection, it is necessary to show that there can be different types of connections. Thus Katz suggests, for example, that there ought to be meaning connections, similarity connections, and the like (I am here using "connection" synonymously with "associations", which is not exactly right, but close enough: think of an association as a set of connections). If there are distinct types of connections, then there is a distinguishable subset of connections, say, meaning-connections or similarity-connections, which exist necessarily in order to distinguish sentences from, say, similarities. But the set of sentence-connections would be just the functional architecture or primitive basis described above.

There is, however, another way to respond to this objection. First, I would like to suggest that sentence-construction and internal representation are different sorts of activities. Sentence-formation is a behavioural or output process, while representation is a cognitive process. The only way to argue against this suggestion is to argue that representations are irreducibly linguistic. Since this is exactly the issue of debate, it will not do to stipulate that representations must be irreducibly linguistic. So it is permissible for me to suggest, at least as a hypothesis, that the two functions are separate.

Now let me consider how representations such as "John -", "Ham", etc. are stored in connectionist systems. [36] It is not the case that they are stored as sentence fragments, as Fodor misleadingly suggests. Rather, a representation of an individual thing, such as "ham" (ignoring such questions as whether we are talking about one ham or ham in general) is a set of connections between one unit at a hidden layer and a number of other units at a different layer. In other words, a representation is a pattern of connectivity. These patterns may be represented as "vectors" of connections between the hidden unit and a matrix of other units at another layer. Therefore, the representation of "ham" is a set of active and non-active units connected to a given unit and the activation of the representation is the activation of the appropriate neurons in that pattern. It is often convenient to line up the matrix of units and display the vector which corresponds to the hidden unit as a series of 1s and 0s according to whether the units are on or off. So the vector for "Ham" could be represented as "10010010010...10010".
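As a rough illustration of a representation as a pattern of connectivity, consider the sketch below. Everything specific in it is an assumption: the layer is cut down to eight units, the indices in `ham_connected_to` are invented, and the helper names are hypothetical. The full "Ham" vector in the text is longer and is not reproduced here.

```python
LAYER_SIZE = 8                      # hypothetical size of the other layer
ham_connected_to = {0, 3, 6}        # hypothetical units linked to the "ham" hidden unit

def connection_vector(connected, size):
    """Line up the units of the layer and write 1 where a connection exists, else 0."""
    return "".join("1" if i in connected else "0" for i in range(size))

def activate(vector):
    """Activating the representation means switching on the units in its pattern."""
    return [bit == "1" for bit in vector]

ham_vector = connection_vector(ham_connected_to, LAYER_SIZE)
layer_state = activate(ham_vector)
```

Note that the hidden unit itself carries no content in this sketch; all of the information about "ham" lies in which other units it is connected to.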

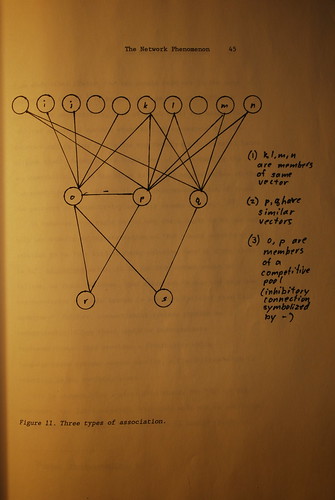

Now we can distinguish between several types of connections. Two representations, that is, two units at the hidden layer, are "similar" if and only if their vectors of connections are similar. In turn, two representations, that is again, two units at the hidden layer, are in some other way associated if they are both units which constitute part of a vector of a higher layer unit. This is a different sort of association, although the mechanism which produces this distinct type of association is exactly the same as the former. A third type of connection exists between units at a given layer, and that is if the units are clustered with each other via inhibitory connections to form a competitive pool.

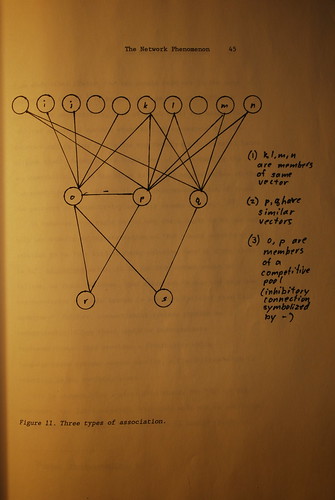

Therefore, there can be three types of association which can be defined in a connectionist system: relations of similarity, which correspond to similarities of vector; relations of category, which correspond to competitive pools at a given layer [37]; and conceptual relations, which correspond to two different units being a part of the same vector. It should be evident that there can be many types of conceptual relations, in fact, one conceptual relation for each vector for each unit at the next layer. One type of conceptual relation is membership in the same sentence. How this process occurs will be described below. See figure 11.
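The three relations can be told apart mechanically, as the following sketch suggests. The vectors, pools and higher-layer unit are invented for the example, and the function names are hypothetical; the agreement-counting measure of similarity is one simple choice among many. The example returns to Katz's "ham" and "eggs": the two are associated without being similar.

```python
# Hypothetical connection vectors for three hidden units.
vectors = {"ham": "10010010", "bacon": "10010011", "eggs": "01101100"}
# Hypothetical competitive pools at the hidden layer.
pools = {"meats": {"ham", "bacon"}, "other_foods": {"eggs"}}
# Hypothetical higher-layer unit whose vector includes two hidden units.
higher_units = {"breakfast": {"ham", "eggs"}}

def similarity(a, b):
    """Relation of similarity: fraction of positions where the two vectors agree."""
    va, vb = vectors[a], vectors[b]
    return sum(x == y for x, y in zip(va, vb)) / len(va)

def same_category(a, b):
    """Relation of category: both units sit in the same competitive pool."""
    return any(a in pool and b in pool for pool in pools.values())

def conceptually_related(a, b):
    """Conceptual relation: both units are part of one higher-layer unit's vector."""
    return any(a in members and b in members for members in higher_units.values())

ham_bacon = (similarity("ham", "bacon"), same_category("ham", "bacon"))
ham_eggs = (similarity("ham", "eggs"), conceptually_related("ham", "eggs"))
```

All three relations are read off the same kind of thing, namely connections between units, which is why no special class of "meaning connections" has to be built in.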

The second sort of criticism of distributed representation is that if representations are distributed, then two representations of the same thing, say "Ham", might be different from each other (Fodor: "no two people ever are in the same intentional stance" [38]). Suppose then we have two different patterns of connectivity, each of which we'll say stands for "Fa" (of course, it doesn't stand for, or correspond to, the sentence per se, but that's the way Fodor puts it so we'll leave it like that). According to Fodor, one of these must be the representation, while the other is only an approximation of the representation. The problem lies in determining which of these two patterns of connectivity actually stands for "Fa". [39]

In response, one might wonder why there ought to be one and only one meaning or representation of "Fa". Fodor considers this solution to be "the kind of yucky solution they're crazy about in AI". Ad hominem aside, it is far from common-sense to believe, as Fodor believes, that there can be one and only one representation of "John Lennon is a better lyricist than Paul McCartney". Further, connectionist systems provide an understanding of how there could be indeterminate representations. This provides a flexibility which language-based systems cannot provide, a flexibility which is essential in our everyday lives.

Let us suppose that a given unit stands for "Fa" (a bit incorrect, but let's suppose). Then this unit may be activated by the vector "11001100". Yet (and this is easily proven on connectionist systems) even a partially complete or incorrect vector will activate the unit in question. For example, it is easily shown that the unit will be activated by "11001101". Fodor's objection is that there is no one vector that is the vector that ought to represent "Fa". But that is like attacking a critic of Platonic forms on the ground that there is no means of determining which of two hand-drawn triangles is the triangle.
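A simple threshold unit is enough to show the effect. The sketch below is one possible arrangement, not the only one: the weights of +1 and -1, the threshold of 2.5, and the names `net_input` and `is_active` are assumptions chosen for illustration. Only the two vectors "11001100" and "11001101" come from the text.

```python
STORED = "11001100"     # the vector from the text
THRESHOLD = 2.5         # hypothetical threshold chosen for this sketch

def net_input(stored, pattern):
    """Add 1 for each active input the unit expects, subtract 1 for each it does not."""
    total = 0
    for expected, actual in zip(stored, pattern):
        if actual == "1":
            total += 1 if expected == "1" else -1
    return total

def is_active(pattern):
    """The unit fires when its net input exceeds the threshold."""
    return net_input(STORED, pattern) > THRESHOLD

exact = is_active("11001100")       # the stored vector itself
noisy = is_active("11001101")       # one incorrect bit, as in the text
unrelated = is_active("00110011")   # the complementary pattern
```

Both the exact and the slightly incorrect vector activate the unit, while the complementary pattern does not, so asking which single vector is "the" representation has no clear answer.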

This is an important concept, and I wish to linger just a moment, for it will surface again. Any given concept, and indeed any given representation, need not correspond to a particular vector, but rather, may consist of a set of vectors. And further, this set of vectors may not have precise boundaries, for what counts as an instance of a concept may vary according to what other concepts are contained in the system. If, for example, there are three colour concepts, then the colour concept "red" may correspond to a wide set of vectors. On the other hand, if there are eight colour concepts, then the set of vectors which correspond to "red" may be much narrower.
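The colour example can be sketched numerically. As a loud simplification, the sketch below replaces vectors with a single number, a hue angle from 0 to 359, and places the colour concepts at evenly spaced prototype hues with "red" at 0; the prototype placement, the nearest-prototype rule and the names used are all assumptions made here. What it shows is only that the extent of "red" depends on how many other concepts compete with it.

```python
def circular_distance(a, b):
    """Distance between two hues on a 360-degree colour circle."""
    d = abs(a - b) % 360
    return min(d, 360 - d)

def hues_counted_as_red(n_concepts):
    """Count the integer hues whose nearest prototype is 'red' (prototype index 0)."""
    prototypes = [k * 360 / n_concepts for k in range(n_concepts)]
    count = 0
    for hue in range(360):
        nearest = min(range(n_concepts),
                      key=lambda k: circular_distance(hue, prototypes[k]))
        if nearest == 0:
            count += 1
    return count

red_with_three = hues_counted_as_red(3)
red_with_eight = hues_counted_as_red(8)
```

With eight concepts in the system far fewer hues fall under "red" than with three, even though nothing about the "red" prototype itself has changed.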

In summary, first, I argue that representational structures are learned; they are not innate. Second, I argue that they are distributed, and not symbolic. And third, I argue that concepts are fuzzy, and not precise. The third is what we would expect were the second true, and the second is what we would expect if the first were true. But my third claim can be empirically tested. It is possible to examine actual human concepts, categories and the like in order to determine whether or not they are vague or fixed. If, as I suspect a rigorous empirical examination will show, they are in fact vague, then, first, I have a confirming instance for my own theory, and second, the language-of-thought theory has a serious difficulty with which it must contend.

[18] See Fodor and Pylyshyn, "Connectionism and Cognitive Architecture: A Critical Analysis", in Steven Pinker and Jacques Mehler, Connections and Symbols, pp. 12-13.

[19] Pylyshyn's "Dial 911 in the event of a fire" example contains much the same argument. There are many ways to recognize a fire, and many ways to dial 911. It is implausible that we have a rule for each possible case. Thus, we have a general rule which covers these sorts of situations.

[20] Shepard's experiments and others are summarized in Kosslyn et al., "On the Demystification of Mental Imagery", in Ned Block (editor), Imagery. See also Stephen Kosslyn, Ghosts in the Mind's Machine.

[21] In Block, Imagery.

[22] Zenon Pylyshyn, Computation and Cognition. Pylyshyn does not discuss what I call the data level. Others, for example McCorduck, suggest that we ought to contemplate a further "knowledge" level. Pamela McCorduck, "Artificial Intelligence: An Apercu", in Stephen Graubard (editor), The Artificial Intelligence Debate, p. 75.

[23] For example, one accidental feature of the hardware might be the material that it is built from. Theorists who assert that only humans have the appropriate hardware are called identity theorists. See U.T. Place, "Is Consciousness a Brain Process?", and J.C.C. Smart, "Sensations and Brain Processes", both in V.C. Chappell (editor), The Philosophy of Mind (1962), pp. 101-109 and 160-172 respectively.

[24] Noam Chomsky, "Rules and Representations", Behavioral and Brain Sciences 3 (1980), p. 10.

[25] Jerry Fodor, Language of Thought.

[26] See Jerry Fodor, Psychosemantics, ch. 1.

[27] See James Kalat, Biological Psychology (1988), p. 192.

[28] Philip Johnson-Laird, The Computer and the Mind, p. 290, cites A.M. Liberman et al., "Perception of the Speech Code", Psychological Review 74 (1967) to make the point.

[29] Sally Rice, PhD Thesis, cited in Jeff Elman, "Representation in Connectionist Models", Connectionism Conference, Simon Fraser University, 1990.

[30] W.V.O. Quine, Word and Object.

[31] All this is surveyed in P.S. Churchland, Neurophilosophy, and James Kalat, Biological Psychology.

[32] Kalat, Biological Psychology, p.185.

[33] E.g., Jerry Fodor and Zenon Pylyshyn, "Connectionism and Cognitive Architecture: A Critical Analysis", in S. Pinker and J. Mehler, Connections and Symbols. Also, in Anderson and Bower, Human Associative Memory: A Brief Edition (1980), p. 65.

[34] Jerrold Katz, The Philosophy of Language, ch. 5.

[35] Fodor and Pylyshyn, "Connectionism and Cognitive Architecture", in Connections and Symbols, p. 18.

[36] This account is drawn from Rumelhart and McClelland, Parallel Distributed Processing, Vol. 2, Ch. 17, and from Hinton and Anderson, Parallel Models of Associative Memory.

[37] Categories are also defined by similarities. This is discussed below.

[38] Fodor, Psychosemantics, p. 57.

[39] Fodor, Psychosemantics.

The Network Phenomenon: Empiricism and the New Connectionism

Stephen Downes, 1990

(The whole document in MS-Word)

TNP Part IV Previous Post

V. Distributed Representation

A. A First Glance at Distributed Representation

Above, when discussing the "Jets and Sharks" example, I mentioned that the hidden units represent individual people. This representation occurs not in virtue of any property or characteristic of the unit in question, but rather, it occurs in virtue of the connections between the unit in question and other units throughout the network. Another way of saying this is to say that the representation of a given individual is "distributed" across a number of units.

The concept of distributed representation is, first, a completely novel concept, and second, central to the replies to many of the objections which may be raised against empiricism and associationism. For that reason, it is best described clearly and it is best developed in contrast with traditional theories of representation.

B. Representation and the Imagery Debate

Traditional systems of representation are linguistic. What I mean by that is that individuals and properties of individuals are represented by symbols. More complex representations are obtained by combining these symbols. The content of the larger representations is determined exclusively by the contents of the individual symbols and the manner in which they are put together. For example, one such representation may be "Fa&Ga". The meaning of the sentence (that is [a bit controversially], the content) is determined by the meanings of "Fa", "Ga", and the logical connective "&".

There are two defining features to such a theory of representation. [18] First, they are governed by a combinatorial semantics. That means there are no global properties of a representation which are over and above an aggregate of the properties of its atomic components. Second, they are structure sensitive. By that, what I mean is that sentences in the representation may be manipulated strictly according to their form, and without regard to their content.

There are several advantages to traditional representations. First, they are exact. We can state, in clear and precise notation, exactly the content of a given representation. Second, the system is flexible. The symbols "F", "G" and "a" are abstracts and can stand for any properties or individuals. Thus, one small set of rules is applicable to a large number of distinct representations. That explains how we reason with and form new representations.

We can illustrate this latter advantage with an example. Human beings learn a finite number of words. However, since the rules are applicable to any set of words, they can be used to construct an infinite number of sentences. And it appears that human beings have the capacity to construct an infinite number of sentences. Now consider a system of representation which depends strictly on content. Then we would need one rule for each sentence. In order to construct an infinite number of sentences, we would need an infinite number of rules. Since it is implausible that we have an infinite number of rules at our disposal, then, we need rules which contain abstract terms and which apply t0 any number of instances. [19]

The linguistic theory of representation is often contrasted with what is called the "picture theory" of representation. Picture theories have in common the essential idea that our representations can in some cases consist of mental images or mental pictures. It appears that we manipulate these pictures according to the rules which govern our actual perceptions of similar events. For example, Sheppard's experiment involving the rotation of a mental image suggest that there is a correlation between the time taken to complete such a task and the angle of rotation - just as though we had the object in our head and had to rotate it physically. [20] See figure 7.

Sheppard's experiments are inconclusive, however. Pylyshyn [21] cautions that there is no reason to believe that laws which govern physical objects are the same as those which govern representations of physical objects (he calls the tendency to suppose that they are the "objective pull"). It could be the case, he argues, that Sheppard's time-trial results are the result of a repeated series of mathematical calculations. It may be the case that we need to repeat the calculation for each degree of rotation. Thus, a mathematical representation could equally well explain Sheppard's time-trial results.

I agree with Pylyshyn that the time-trial results are inconclusive. So we must look for other reasons in order to determine whether we would prefer to employ a linguistic or non-linguistic theory of representation.

C. Cognitive Penetrability

Pylyshyn argues that in computational systems there are different levels of description. Essentially, there is the cognitive (or software) level and the physical (or hardware) level. [22] Aspects of the software level, he argues quite reasonably, will be determined by the hardware. The evidence in favour of a language-based theory of representation is that there are some aspects of linguistic performance which cannot be changed by thought alone. Therefore, they must be built into the hardware. Further study is needed to distinguish these essential hardware constraints from accidental hardware constraints, but this need not concern us. [23] The core of the theory is that there are cognitively impenetrable features of representation, and that these features are linguistic.

These features are called the "functional architecture". When I assert that these features are linguistic, I do not mean that they are encoded in a language. Rather, what I mean is that the architecture is designed to be a formal, or principled architecture. It is structure-sensitive and abstract. Perhaps the most widely known functional architecture is Chomsky's "universal grammar" which contains "certain basic properties of the mental representations and rule systems that generate and relate them." [24] Similarly, Fodor proposes a "Language of Thought" which has as its functional architecture a "primitive basis", which includes an innate vocabulary, and from which all representations may be constructed. [25]

The best argument in favour of the functional architecture theory (in my opinion) is the following. In order to have higher-level cognitive functions, it is necessary to have the capacity to describe various phenomena at suitable degrees of abstraction. With respect to cognitive phenomena themselves, these degrees of abstraction are captured by 'folk psychological' terminology - the language of "beliefs", "intentions", "knowledge" and the like (as opposed to descriptions of neural states or some other low level description). The adequacy of folk psychology is easily demonstrated [26] and it is likely that humans employ similarly abstract descriptions in order to use language, do mathematics, etc.

It is important at this time to distinguish between describing a process in abstract terms and regulating a process by abstract rules. There is no doubt that abstractions are useful in description. But, as argued above, connectionist systems which are not governed by abstract rules can nonetheless generalize, and hence, describe in abstract terms. What Chomsky, Fodor and Pylyshyn are arguing is that these abstract processes govern human cognition. This may be a mistake. The tendency to say that rules which may be used to describe a process are also those which govern it may be what Johnson-Laird calls the "Symbolic Fallacy".

What is needed, if it is to be argued that abstract rules govern mental representations, is inescapable proof that they do. Pylyshyn opts for a cognitive approach; he attempts to determine those aspects of cognition which cannot be changed by thought alone. The other approach which could be pursued is the biological approach: study brains and see what makes them work. Although I do not believe that psychology begins and ends with the slicing of human brains, I nonetheless favour the biological approach in this instance. For it is arguable that nothing is cognitively impenetrable.

Let me turn directly to the attack, then. If the physical construction of the brain can be affected cognitively, then even if rules are hard-wired, they can be cognitively penetrable. If we allow that "cognitive phenomena" can include experience, then there is substantial experimental support which shows that the construction of the brain can be affected cognitively. There is no reason not to call a perceptual experience a cognitive phenomenon. For otherwise, Pylyshyn's argument begs the question, since it is easy, and evidently circular, to oppose empiricism if experience is not one of the cognitive phenomena permitted to exist in the brain.

A series of experiments has shown that the physical constitution of the brain is changed by experience and especially by experience in early age. Hubel and Wiesel showed that a series of rather gruesome stitchings and injuries to cats' eyes changes the pattern of neural connectivity in the visual cortex. [27] Similar phenomena have been observed in humans born with eye disorders. Even after a disorder, such as crossed eyes, has been surgically repaired, the impairment in visual processing continues. Therefore, it is arguable that experience can change the physical constitution of the brain. There is no a priori reason to argue that experience might not also change some high-level processing capacities as well.

Let me suggest further that there is no linguistic aspect of cognition which cannot be changed by thoughts and beliefs. For example, one paradigmatic aspect of linguistic behaviour is that the rules governing the manipulation of a symbol ought to be truth-preserving. Thus, no rule can produce an internally self-contradictory representation. However, my understanding of religious belief leads me to believe that many religious beliefs are inherently self-contradictory. For example, some people really believe that God is all-powerful and yet cannot do some things. If it is possible even to entertain such beliefs, then it is possible at least to entertain the thought that the principle of non-contradiction could be suspended. Therefore, the principle does not act as an all-encompassing constraint on cognition. It is therefore not built into the human brain; it is learned.

Wittgenstein recognized that many supposedly unchangeable aspects of cognition are not, in fact, unchangeable. In On Certainty, Wittgenstein examines those facts and rules which constitute the "foundation" or "framework" of cognition. He concludes, first, that there are always exceptions to be found to those rules, and second, that these rules change over time. He employs the analogy of a "riverbed" to describe how these rules, while foundational, may shift over time.

If the rules which govern representations are learned, and not innate, then it does not follow that it is necessary that these rules ought to follow any given a priori structure. And if this does not follow, then it further does not follow that rules ought to follow a priori rules of linguistic behaviour. Since the rules which govern representations are learned, then, it follows that there is no a priori reason to suppose that human representations are linguistic in form. They could be, but there is no reason to suppose that they must be. The question, then, of what sort of rules representations will follow will not be determined by an analysis of representations in the search of governing a priori principles. We must look at actual representations to see what they are made of and how they work. In a word, we must look at the brain.

D. Two Short Objections to Fodor

Before looking at brains, I would like in this section to propose two short objections to Fodor's thesis that language use is governed by a set of rules which is combinatorial and structure-sensitive.

The first objection is that it would be odd if the meaning of sentences was determined strictly by the meanings of their atomic components. The reason why it would be odd is that we do not determine the meaning of words according to the meanings of their atomic components, namely, letters. It is in fact arguable that even the pronunciation of words is not determined strictly according to the pronunciation of individual letters (here I am reminded of "ghoti", the alternative spelling for "fish" often attributed to Shaw). Further, just as the pronunciation of individual letters is affected by the word as a whole (the letter "b" is pronounced differently in "but" and "bought" - the shape of the mouth differs in each case), so may also the meaning of a word be affected by the sentence as a whole and the context in which it is uttered. [28] See figure 8.

The second objection is that a strict application of grammatical rules results in absurd sentences. Therefore, something other than grammatical rules governs the construction of sentences. Rice [29] points out that the transformation from "John loves Mary" to "Mary is loved by John" ought to follow only from the transitivity of "loves". However, the transformation from "John loves pizza" to "Pizza is loved by John" is, to say the least, odd. In addition, grammatical rules, if they are genuinely structure-sensitive, ought to be recursive. Thus, the rule which allows us to construct the sentence "The door the boy opened is green" from "The door is green" ought to allow us to construct "The door the boy the girl hated opened is green" and the even more absurd "The door the boy the girl the dog bit hated opened is green" and so on ad infinitum ad absurdum (or whatever).
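The recursive rule in the second objection can be made concrete with a short sketch. The function name `embed` and its argument layout are hypothetical, chosen only for illustration; nothing here is drawn from Rice or Elman. It applies the same relative-clause rule any number of times, which is exactly what a purely structure-sensitive rule would permit.

```python
def embed(head, clauses, predicate):
    """Build a centre-embedded sentence.

    head      -- the outermost noun phrase, e.g. "The door"
    clauses   -- (subject, verb) pairs, outermost clause first
    predicate -- the main predicate, e.g. "is green"
    """
    subjects = [subject for subject, _ in clauses]
    # Verbs close in the reverse of the order in which their subjects opened.
    verbs = [verb for _, verb in reversed(clauses)]
    return " ".join([head, *subjects, *verbs, predicate])


print(embed("The door", [], "is green"))
print(embed("The door", [("the boy", "opened")], "is green"))
print(embed("The door", [("the boy", "opened"), ("the girl", "hated")], "is green"))
```

Nothing in the rule itself limits the length of `clauses`, yet each added pair makes the result harder to accept as a sentence. That gap between what the rule licenses and what speakers accept is the point of the objection.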

E. Brains and Distributed Representations

The human brain is composed of a set of interconnected neurons. Therefore, in order to determine whether or not the brain, without the assistance of higher-level rules, can construct representations, it is useful to determine, first, whether or not representations can be constructed in systems comprised solely of interconnected neurons, and second, whether or not they are in fact constructed in brains in that manner.

At least at some levels, there is substantial evidence that representations both can be and are stored non-linguistically. Sensory processing systems, routed first through the thalamus and then into the cerebral cortex, produce not sentences and words, but rather, representational fields (much like Quine's 'quality spaces' [30]) which correspond to the structure of the input sensory modality. For example, the neurons in level IV of the visual cortex are arranged in a single sheet. The relative positions of the neurons on this sheet correspond to the relative positions of the input neurons in the retina. Variations in the number of cells producing output from the retina produce corresponding variations in the arrangement of the cells in V-IV. Similarly, the neurons in the cortex connected to input cells in the ear are arranged according to frequency. In fact, the cells of most of the cerebral cortex may be conceived to be arranged into a "map" of the human body. [31] See figure 9.

The connections between neurons in the human visual system are just the same as those in the connectionist networks described above. There are essentially two types of connections: excitatory connections, which tend to connect one layer of cells with the next (for example, the connections between retinal cells and ganglia); and inhibitory connections, which tend to extend horizontally at a given level (for example, the connections between the horizontal cells and the bi-polar cells in the retina). [32] See figure 10. Thus, the structure of cells processing human vision parallels the structure of IAC networks described above.

It is, however, arguable that while lower-level cognitive processes such as vision can occur in non-linguistic systems, higher-level cognitive processes, and specifically those in which we entertain beliefs, knowledge, and the like, must be linguistically based. [33] In order to show that this is wrong, it is necessary to show that higher-level cognitive processes may be carried out by an exclusively lower-level structure. The lower level structure which I believe accomplishes this is distributed representation.

I think that the best way to show that these higher-level functions can be done by connectionist systems is to illustrate those arguments which conclude that they cannot be done and to show how those arguments are misconstrued. In the process of responding to these objections, I will describe in more detail the idea of distributed representation.

F. Distributed Representations: Objections and Responses

There are two types of objections to distributed representations.

The first of these is outlined by Katz, though Fodor provides a version of it. The idea is that if representations depend only on connected sets of units or neurons, then it is not possible to sort out two distinct representations or two distinct kinds of representations. Katz writes, "Given that two ideas are associated, each with a certain strength of association, we cannot decide whether one has the same meaning as the other, whether they are different in meaning... etc." For example, "ham" and "eggs" are strongly associated, yet we cannot tell whether this is a similarity in meaning or not. [34]

Fodor's argument is similar. Suppose each unit stands for a sentence fragment, for example, "John -" is connected to " - is going to the store." Suppose further that a person at the same time entertains a connection between "Mary -" and " - is going to school". In such a situation, we are unable to determine which connections, such as that between "John -" and " - is going to the store", represent a sentence and which, such as that between "John -" and "Mary -", represent some other association, for example, between brother and sister. [35]

In order to respond to this objection, it is necessary to show that there can be different types of connections. Thus Katz suggests, for example, that there ought to be meaning connections, similarity connections, and the like (I am here using "connection" synonymously with "associations", which is not exactly right, but close enough: think of an association as a set of connections). If there are distinct types of connections, then there is a distinguishable subset of connections, say, meaning-connections or similarity-connections, which exist necessarily in order to distinguish sentences from, say, similarities. But the set of sentence-connections would be just the functional architecture or primitive basis described above.

There is, however, another way to respond to this objection. First, I would like to suggest that sentence-construction and internal representation are different sorts of activities. Sentence-formation is a behavioural or output process, while representation is a cognitive process. The only way to argue against this suggestion is to argue that representations are irreducibly linguistic. Since this is exactly the issue of debate, it will not do to stipulate that representations must be irreducibly linguistic. So it is permissible for me to suggest, at least as a hypothesis, that the two functions are separate.

Now let me consider how representations such as "John -", "Ham", etc. are stored in connectionist systems. [36] It is not the case that they are stored as sentence fragments, as Fodor misleadingly suggests. Rather, a representation of an individual thing, such as "ham" (ignoring such questions as whether we are talking about one ham or ham in general) is a set of connections between one unit at a hidden layer and a number of other units at a different layer. In other words, a representation is a pattern of connectivity. These patterns may be represented as "vectors" of connections between the hidden unit and a matrix of other units at another layer. Therefore, the representation of "ham" is a set of active and non-active units connected to a given unit and the activation of the representation is the activation of the appropriate neurons in that pattern. It is often convenient to line up the matrix of units and display the vector which corresponds to the hidden unit as a series of 1s and 0s according to whether the units are on or off. So the vector for "Ham" could be represented as "10010010010...10010".
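To make the idea of a pattern of connectivity concrete, here is a minimal sketch. The feature-unit names and the particular connections are invented for illustration and are not taken from the Parallel Distributed Processing models; the only point is that the hidden unit for "ham" has no content of its own and is characterised entirely by which other units it connects to.

```python
# Hypothetical feature layer: these unit names are assumptions for illustration.
FEATURE_UNITS = ["edible", "meat", "salty", "pink",
                 "round", "animate", "breakfast", "liquid"]

# Each hidden unit is described only by the feature units it is connected to.
CONNECTIONS = {
    "ham": {"edible", "meat", "salty", "pink", "breakfast"},
    "eggs": {"edible", "round", "breakfast"},
}


def connection_vector(hidden_unit):
    """Return the pattern of connectivity as a list of 1s (connected) and 0s."""
    linked = CONNECTIONS[hidden_unit]
    return [1 if unit in linked else 0 for unit in FEATURE_UNITS]


def as_string(vector):
    """Line the vector up as a string of 1s and 0s."""
    return "".join(str(bit) for bit in vector)


print(as_string(connection_vector("ham")))   # 11110010
print(as_string(connection_vector("eggs")))  # 10001010
```

Reordering `FEATURE_UNITS` changes the printed strings but not the representation, since what matters is the set of connections and not the position of any one digit.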

Now we can distinguish between several types of connections. Two representations, that is, two units at the hidden level, are "similar" if and only if their vectors of connections are similar. In turn, two representations, that is again, two units at the hidden layer, are in some other way associated if they are both units which constitute part of a vector of a higher layer unit. This is a different sort of association, although the mechanism which produces this distinct type of association is exactly the same as the former. A third type of connection exists between units at a given layer, and that is if the units are clustered with each other via inhibitory connections to form a competitive pool.

Therefore, there can be three types of association which can be defined in a connectionist system: relations of similarity, which correspond to similarities of vector; relations of category, which correspond to competitive pools at a given layer [37]; and conceptual relations, which correspond to two different units being a part of the same vector. It should be evident that there can be many types of conceptual relations, in fact, one conceptual relation for each vector for each unit at the next layer. One type of conceptual relation is membership in the same sentence. How this process occurs will be described below. See figure 11.
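The three types of association can be told apart mechanically, which is what the reply to Katz requires. The sketch below is illustrative only: the vectors, pool names and higher-layer unit are invented, and the similarity measure (the fraction of matching positions) is one simple choice among many.

```python
# All names and patterns below are assumptions made for illustration.
VECTORS = {
    "ham":   [1, 1, 1, 1, 0, 0, 1, 0],
    "bacon": [1, 1, 1, 0, 0, 0, 1, 0],
    "eggs":  [1, 0, 0, 0, 1, 0, 1, 0],
}

# Competitive pools: units at one layer linked by mutual inhibition.
POOLS = {"foods": {"ham", "bacon", "eggs"}, "drinks": {"coffee"}}

# Higher-layer units, each with the lower-layer units in its own vector.
HIGHER_UNITS = {"breakfast plate": {"ham", "eggs", "coffee"}}


def similarity(a, b):
    """Relation of similarity: fraction of positions where the vectors agree."""
    pairs = list(zip(VECTORS[a], VECTORS[b]))
    return sum(1 for x, y in pairs if x == y) / len(pairs)


def same_category(a, b):
    """Relation of category: both units belong to one competitive pool."""
    return any(a in pool and b in pool for pool in POOLS.values())


def conceptual_relations(a, b):
    """Conceptual relation: both units are part of the same higher-unit vector."""
    return [name for name, parts in HIGHER_UNITS.items() if a in parts and b in parts]


print(similarity("ham", "bacon"), similarity("ham", "eggs"))
print(same_category("ham", "eggs"), same_category("ham", "coffee"))
print(conceptual_relations("ham", "eggs"), conceptual_relations("ham", "bacon"))
```

On these invented values "ham" and "eggs" are conceptually related through the higher unit while their vectors agree on only half the positions, so a strong association need not be read as a similarity of meaning.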

The second sort of criticism of distributed representation is that if representations are distributed, then two representations of the same thing, say "Ham", might be different from each other (Fodor: "no two people ever are in the same intentional stance" [38]). Suppose then we have two different patterns of connectivity, each of which we'll say stands for "Fa" (of course, it doesn't stand for, or correspond to, the sentence per se, but that's the way Fodor puts it so we'll leave it like that). According to Fodor, one of these must be the representation, while the other is only an approximation of the representation. The problem lies in determining which of these two patterns of connectivity actually stands for "Fa". [39]

In response, one might wonder why there ought to be one and only one meaning or representation of "Fa". Fodor considers this solution to be "the kind of yucky solution they're crazy about in AI". Ad hominem aside, it is far from common-sense to believe, as Fodor believes, that there can be one and only one representation of "John Lennon is a better lyricist than Paul McCartney". Further, connectionist systems provide an understanding of how there could be indeterminate representations. This provides a flexibility which language-based systems cannot provide, a flexibility which is essential in our everyday lives.

Let us suppose that a given unit stands for "Fa" (a bit incorrect, but let's suppose). Then this unit may be activated by the vector "11001100". Yet (and this is easily proven on connectionist systems) even a partially complete or incorrect vector will activate the unit in question. For example, it is easily shown that the unit will be activated by "11001101". Fodor's objection amounts to the claim that there is no one vector that is the vector that ought to represent "Fa". But that is like attacking a critic of Platonic forms on the ground that there is no means of determining which of two hand drawn triangles is the triangle.
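The claim that an imperfect vector still activates the unit can be checked with a very small model. This is a sketch under stated assumptions and not the IAC network discussed earlier: the unit is a plain threshold unit, its weights are +1 where the stored pattern has a 1 and -1 where it has a 0, and the threshold of 2.5 is an arbitrary illustrative value.

```python
STORED = "11001100"   # the pattern the unit was trained on (from the text)
THRESHOLD = 2.5       # assumed value, chosen only for illustration


def weights_for(pattern):
    """Excitatory weight (+1) for each expected 1, inhibitory (-1) for each 0."""
    return [1 if bit == "1" else -1 for bit in pattern]


def net_input(pattern, vector):
    """Sum of weight times input over all connections to the unit."""
    return sum(w * int(x) for w, x in zip(weights_for(pattern), vector))


def is_active(pattern, vector, threshold=THRESHOLD):
    """The unit fires when its net input exceeds the threshold."""
    return net_input(pattern, vector) > threshold


for vector in ("11001100", "11001101", "00110011"):
    print(vector, net_input(STORED, vector), is_active(STORED, vector))
```

The exact vector gives a net input of 4 and the vector with one wrong bit gives 3, so both exceed the threshold; the complementary vector gives -4 and does not. On this model no single vector is privileged as the one that stands for "Fa".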

This is an important concept, and I wish to linger just a moment, for it will surface again. Any given concept, and indeed any given representation, need not correspond to a particular vector, but rather, may consist of a set of vectors. And further, this set of vectors may not have precise boundaries, for what counts as an instance of a concept may vary according to what other concepts are contained in the system. If, for example, there are three colour concepts, then the colour concept "red" may correspond to a wide set of vectors. On the other hand, if there are eight colour concepts, then the set of vectors which correspond to "red" may be much narrower.
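The colour example can be sketched as follows. Several simplifying assumptions are made here: a single hue angle stands in for a full vector, each concept is given one invented prototype hue, and an input falls under whichever prototype is nearest. None of these details come from the source; they serve only to show how the extent of "red" depends on which other concepts the system contains.

```python
def hue_distance(a, b):
    """Shortest distance between two hues on a 360-degree colour wheel."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def classify(hue, prototypes):
    """Return the concept whose prototype hue is nearest to the given hue."""
    return min(prototypes, key=lambda name: hue_distance(hue, prototypes[name]))


def extent(concept, prototypes):
    """Count the whole-degree hues that fall under the given concept."""
    return sum(1 for hue in range(360) if classify(hue, prototypes) == concept)


# Hypothetical prototype hues, in degrees.
THREE = {"red": 0, "green": 120, "blue": 240}
EIGHT = {"red": 0, "orange": 30, "yellow": 60, "green": 120,
         "cyan": 180, "blue": 240, "purple": 280, "pink": 330}

print(classify(40, THREE), classify(40, EIGHT))
print(extent("red", THREE), extent("red", EIGHT))
```

A hue of 40 degrees counts as "red" in the three-concept system and as "orange" in the eight-concept system, and the number of hues falling under "red" shrinks accordingly, although nothing about the input itself has changed.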

In summary, first, I argue that representational structures are learned; they are not innate. Second, I argue that they are distributed, and not symbolic. And third, I argue that concepts are fuzzy, and not precise. The third is what we would expect were the second true, and the second is what we would expect if the first were true. But my third claim can be empirically tested. It is possible to examine actual human concepts, categories and the like in order to determine whether or not they are vague or fixed. If, as I suspect a rigorous empirical examination will show, they are in fact vague, then, first, I have a confirming instance for my own theory, and second, the language-of-thought theory has a serious difficulty with which it must contend.


[18] See Fodor and Pylyshyn, "Connectionism and Cognitive Architecture: A Critical Analysis", in Steven Pinker and Jacques Mehler, Connections and Symbols, pp. 12-13.

[19] Pylyshyn's "Dial 911 in the event of a fire" example contains much the same argument. There are many ways to recognize a fire, and many ways to dial 911. It is implausible that we have a rule for each possible case. Thus, we have a general rule which covers these sorts of situations.

[20] Sheppard's experiments and others are summarized in Kosslyn et al., "On the Demystification of Mental Imagery", in Ned Block (editor), Imagery. See also Stephen Kosslyn, Ghosts in the Mind's Machine.

[21] In Block, Imagery.

[22] Zenon Pylyshyn, Computation and Cognition. Pylyshyn does not discuss what I call the data level. Others, for example McCorduck, suggest that we ought to contemplate a further "knowledge" level. Pamela McCorduck, "Artificial Intelligence: An Apercu", in Stephen Graubard (editor), The Artificial Intelligence Debate, p. 75.

[23] For example, one accidental feature of the hardware might be the material that it is built from. Theorists who assert that only humans have the appropriate hardware are called identity theorists. See U.T. Place, "Is Consciousness a Brain Process?", and J.C.C. Smart, "Sensations and Brain Processes", both in V.C. Chappell (editor), The Philosophy of Mind (1962), pp. 101-109 and 160-172 respectively.

[24] Noam Chomsky, "Rules and Representations", Behavioral and Brain Sciences 3 (1980), p. 10.

[25] Jerry Fodor, Language of Thought.

[26] See Jerry Fodor, Psychosemantics, ch. 1.

[27] See James Kalat, Biological Psychology (1988), p. 192.

[28] Philip Johnson-Laird, The Computer and the Mind, p. 290, cites A.M. Liberman et al., "Perception of the Speech Code", Psychological Review 74 (1967) to make the point.

[29] Sally Rice, PhD Thesis, cited in Jeff Elman, "Representation in Connectionist Models", Connectionism Conference, Simon Fraser University, 1990.

[30] W.V.O. Quine, Word and Object.

[31] All this is surveyed in P.S. Churchland, Neurophilosophy, and James Kalat, Biological Psychology.

[32] Kalat, Biological Psychology, p.185.

[33] E.g. Jerry Fodor and Zenon Pylyshyn, "Connectionism and Cognitive Architecture: A Critical Analysis" in S. Pinker and J. Mehler, Connections and Symbols. Also, in Anderson and Bower, Human Associative Memory: A Brief Edition (1980), p. 65.

[34] Jerrold Katz, The Philosophy of Language, ch. 5.

[35] Fodor and Pylyshyn, "Connectionism and Cognitive Architecture", in Connections and Symbols, p. 18.

[36] This account is drawn from Rumelhart and McClelland, Parallel Distributed Processing, Vol. 2, Ch. 17, and from Hinton and Anderson, Parallel Models of Associative Memory.

[37] Categories are also defined by similarities. This is discussed below.

[38] Fodor, Psychosemantics, p. 57.

[39] Fodor, Psychosemantics.