Apr 05, 2010

Originally posted on Half an Hour, April 5, 2010.

I'm going to use an oversimplified example from electricity to make a point. I still think there is a deficiency in the personal knowledge management model being discussed in various quarters. Let me see if I can tease it out with the following discussion.

Harold Jarche points to a diagram Silvia Andreoli adds to his last post on personal knowledge management. Here it is:

Now the activity happening at the centre is becoming more sophisticated, with an expanded list of processes happening, to convert data into knowledge. I don't want to focus on the particular types of activity - that's just mechanics. I am more concerned with what might be called the 'flow' of information from data to knowledge.

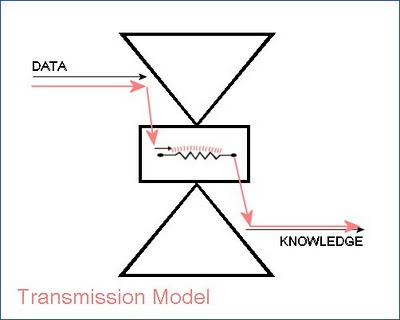

So let me strip down the details and present an abstract version of the model.

In a nutshell: does the data itself become knowledge, or does the data lead to something else becoming knowledge? Let me use my electrical analogy to make the point.

In what might be called the 'naive model' (not disparagingly) we have a direct circuit from input (data) to output (knowledge). The purpose of the process in the middle is to filter, transform, reshape, and otherwise improve the data, but ultimately, to pass it along. Like this:

Now presumably, what is happening here is the data is coming in from outside the person and the knowledge is being stored or in some way impressed in the head or mind; there may in addition be an output in the form of a transmission or creative act, producing the freshly minted data as publicly accessible 'knowledge'.

But I'm not at all sure this is the correct model. I don't think there is a direct flow from data to knowledge. My model looks more like this:

What we have here is a model where the input data induces the creation of knowledge. There is no direct flow from input to output; rather, the input acts on the pre-existing system, and it is the pre-existing system that produces the output.

In electricity, this is known as induction, and it is a common phenomenon. We use induction to build step-up or step-down transformers, to power electric motors, and more. Basically, the way induction works is that, first, an electric current produces a magnetic field, and second, a changing magnetic field creates an electric current.
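For readers who want the textbook version of the analogy, the two standard relations are Faraday's law and the ideal transformer equation. This is only a sketch under idealized assumptions (no losses, perfect coupling between the coils). The symbols are the usual textbook ones and are not taken from the diagrams in this post: $\mathcal{E}$ is the induced voltage, $N$ the number of turns in a coil, $\Phi_B$ the magnetic flux through it, and the subscripts $p$ and $s$ mark the primary (input) and secondary (receiving) coils.

$$
\mathcal{E} = -N \frac{d\Phi_B}{dt}, \qquad \frac{V_s}{V_p} = \frac{N_s}{N_p}
$$

The second relation carries the point of the analogy: the output voltage depends on how the receiving coil is wound, not only on what is fed into the circuit.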

Why is this significant? Because the inductive model (not the greatest name in the world, but a good alternative to the transmission model) depends on the existing structure of the receiving circuit, the knowledge output may vary from system to system (person to person), depending on the pre-existing configuration of that circuit.

What it means is that you can't just apply some sort of standard recipe and get the same output. Whatever combination of filtering, validation, synthesis and all the rest you use, the resulting knowledge will be different for each person. Or alternatively, if you want the same knowledge to be output for each person (were that even possible), you would have to use a different combination of filtering, validation, synthesis for each person.
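The contrast can be put as a toy sketch. Everything here is an assumption made for illustration: the function names (`transmit`, `induce`, `respond`), the two "people", and the idea of representing a person's pre-existing configuration as a short list of numbers. It is not a model of cognition, only a way of showing that the same input and the same recipe give the same result under transmission, but different results under induction.

```python
# Hypothetical names throughout; a toy illustration, not a model of cognition.

def transmit(data, steps):
    """Transmission model: the data itself is processed and passed along."""
    for step in steps:
        data = step(data)
    return data

def induce(state, data, rate=0.5):
    """Induction model: the data nudges a pre-existing state and is then discarded."""
    return [s + rate * (x - s) for s, x in zip(state, data)]

def respond(state, cue):
    """The output is produced by the current state, not copied from the input."""
    return sum(s * c for s, c in zip(state, cue))

data = [1.0, 1.0]
recipe = [lambda d: [2 * x for x in d]]  # stand-in for filtering, validation, synthesis

# Transmission: the same recipe gives the same result, whoever runs it.
same_for_everyone = transmit(data, recipe)

# Induction: the same data acts on two different prior configurations.
alice = induce([0.0, 1.0], data)
bob = induce([1.0, 0.0], data)

cue = [1.0, 0.0]
print(same_for_everyone)    # [2.0, 2.0]
print(respond(alice, cue))  # 0.5
print(respond(bob, cue))    # 1.0
```

Alice and Bob received identical data, yet they answer the same cue differently, because what answers is their adjusted prior state and not the data.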

That's why personal knowledge is personal. Each person, individually, presumably attempting to approximate the production of knowledge output exhibited by other people who know (where this knowledge output may be as simple as the recitation of a fact or as complex as a set of expert behaviours in a knowing community), must select an individual set of filtering, validation, synthesis, etc., activities.

And probably, the best (and only) person who can make this selection is the person himself or herself, because only the person in question knows, and can make adjustments to, the internal circuit in order to produce the desired output. That doesn't mean we can't suggest, demonstrate, or in other ways mediate these adjustments.

So why do I think the induction model is more likely to be correct than the transmission model?

What characterizes induction is a field shift. Though we can track the flow of energy from input to output (which is why no causal laws are broken) the type of energy changes from electrical to magnetic and back. Hence, the carriers of the energy, the individual electrons, never connect from beginning to end.

A similar sort of field shift happens in knowledge transmission. When we think, we convert from complex neural structures to words. We output these words, and it is these words that constitute (in part) the data that enters the system (other forms of data, such as other audio-visual inputs, are also present). This data, in the process of becoming knowledge, is not stored as the physical inputs (we do not literally store sounds in our brains) nor even as echoes of them.

Rather, what happens is that, as the cascading waves of sensory input diffuse through our neural net, they have a secondary, inductive effect of adjusting the set of pre-existing neural connections in the brain. It is this set of neural connections that constitutes knowledge, not the set of signals, however processed and filtered, that acted upon them.

At a certain gross level this should be pretty obvious. When we examine the brain, we do not detect sounds or images, nor even (beyond the most basic sort) echo-like constructions or neural arrangements that correspond to them. Nor do we detect sentences, syntax-like structures, or anything similar. Therefore, whatever knowledge is, it has undergone a field shift.

But we do infer to the existence of such structures, and we infer to them on the basis of what appear to be obvious productions of knowledge. Not only can we write text, draw pictures, and speak descriptions, we have actual memories and dreams that have the same phenomenal qualities as those we experienced in the first place. This would not be possible (to echo Chomsky) were they not stored in the mind in the first place. Would it?

Only if there are no field shifts. But if there are field shifts (which would explain why we cannot observe in the brain what we so obviously experience in the having of one) then the production of dreams, memories, verbal utterances, and other behaviours constitutes the reverse field change. It's like converting the magnetism back to electricity again.

Our dreams, memories, thoughts and behaviours aren't stored in the brain and then re-presented. They are built from scratch again as a result of the functioning of the neural network. A memory isn't the same experience which is had a second time. It is a new experience.

That's why we misremember, have fanciful dreams, see things as we want to see, and all the rest. When we are recreating the phenomenal experience, this recreation is affected by any number of factors: all the other elements of the neural net, the configuration of the pre-existing circuit.
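One way to make this concrete is a very small associative memory. What follows is a minimal sketch, assuming a Hopfield-style network with a Hebbian learning rule, which is a standard textbook technique and not something proposed in this post. The names (`train`, `recall`, `experience`, `other`) and the six-element patterns are hypothetical. The thing to notice is that `recall` has no access to the original pattern: it rebuilds an output from the connection weights and a partial cue, and a network with a different history rebuilds something different from the very same cue.

```python
# A toy Hopfield-style associative memory (an assumed stand-in, not the author's model).

def train(patterns):
    """Hebbian rule: each pattern adjusts the connection weights between units."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, cue, sweeps=5):
    """Rebuild a pattern from the weights, starting from a (possibly degraded) cue."""
    state = list(cue)
    for _ in range(sweeps):
        for i in range(len(state)):
            total = sum(weights[i][j] * state[j] for j in range(len(state)))
            state[i] = 1 if total >= 0 else -1
    return state

experience = [1, -1, 1, -1, 1, -1]   # what network A was exposed to
other = [1, 1, 1, -1, -1, -1]        # what network B was exposed to
cue = [1, -1, 1, -1, -1, -1]         # the same partial prompt given to both

network_a = train([experience])
network_b = train([other])

print(recall(network_a, cue))  # [1, -1, 1, -1, 1, -1]
print(recall(network_b, cue))  # [1, 1, 1, -1, -1, -1]
```

Each network "remembers" with complete confidence, and each produces a different memory from the same prompt, because the recollection is generated by the existing connections rather than retrieved from storage.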

We can draw numerous lessons from this, and I have drawn them in other posts. That we do not remember 'facts', for example. That knowledge is 'grown' through the growing of our neural network, rather than accumulation or construction or any of the theories that do not incorporate a field shift. And the rest, which I won't reiterate here.

Why this is important for the present purposes is that it changes our approach to the sorts of activities postulated to take place in personal knowledge management, the filtering, validation, synthesis and all the rest. Because we now have two points of view from which we can regard these activities:

- from the perspective of the content on which they operate, or

- from the perspective of the person that is doing the operating.

To put the distinction very crassly, we could say that, on the one view, the content constitutes the knowledge, while on the other, the operation constitutes the knowledge. This latter view, which can be classified under the heading of operationalist theories of knowledge, is more representative of the inductivist approach.

The paradigm case here is mathematical knowledge. In what does a knowledge of mathematics consist? A typically realist interpretation of mathematics will say something like, "there are such things as mathematical objects, and there is a set of facts that describes those objects, and mathematical knowledge consists of the acquisition, or at the very least, the internalization, of those facts."

An operationalist interpretation of mathematics, by contrast, remains silent on the question of the existence of mathematical objects, and interprets mathematical knowledge as corresponding (for lack of a better word) to the operations typical of mathematics. The number 'four' is tantamount to an act of counting, "one - two - three - four." The act of addition is tantamount to the act of putting one pile of beans in the same place as another pile of beans, and then counting all of them (a short though critical account of Kitcher-Mill can be found here).

When we place the locus of knowledge, not in the content, but in the person, then the content becomes essentially nothing more than the raw material on which the learning practice will occur. What matters is not the semantical referent of the input data, but rather, the act (or operation) of filtering, validation, synthesis, etc., that takes place on that content.

When we say something like "words are things we use to think" we should understand this in the sense of "paint is something we use to imagine" or "sand is something we use to tell time". Time is not in the sand, imagination is not in the paint, and thought is not in the words. These are just raw materials we use to stimulate an inductive process - we can generate a field shift from thought to sand to thought again.

Even when you are explicitly teaching content, and when what appears to be learned is content, since the content itself never persists from the initial presentation of that content to the ultimate reproduction of that content, what you are teaching is not the content. Rather, what you are trying to induce is a neural state that, when presented with similar phenomena in the future, will produce similar output. Understanding that you are training a neural net, rather than storing content for reproduction, is key.
