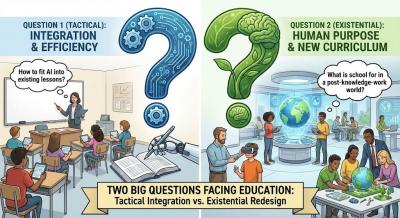

I think the basic premise is right: "there are two boxes — How to Use AI in the current curriculum and how to change the curriculum so school is still relevant"... and the important box is the second one. But Stefan Bauschard makes two statements about the second box that seem to me to be just wrong. The first is this: "future success in work or entrepreneurship will be determined by how well you manage agent teams." Why would we need to manage agent teams? Let the AI do that. All we need to do is tell the AI what we want. The second: "the most important question facing society is who gets to decide what AI does." Why does everyone refer to 'AI' in the singular? Just as there are many people (billions, even), there will be many AIs. The real question for the future is: how many of those billions of people get to benefit from an AI? If it's fewer than 'billions of people', we have hard-wired an unsustainable inequality into society, with all the harm that follows from that.
