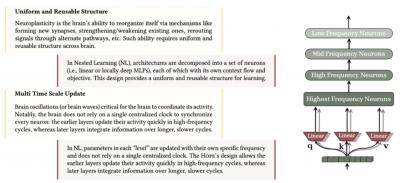

This article is a summary of the full paper (a 16-page PDF) describing what may be a significant advance in AI. It's called 'Nested Learning' and is described as follows: "Nested Learning treats a single ML model not as one continuous process, but as a system of interconnected, multi-level learning problems that are optimized simultaneously. We argue that the model's architecture and the rules used to train it (i.e., the optimization algorithm) are fundamentally the same concepts; they are just different 'levels' of optimization, each with its own internal flow of information ('context flow') and update rate." Although these levels handle different information, all of them are built on the same principle: associative memory, that is, learning a mapping from keys to values.

I admit I've been a bit slow on the uptake here (the paper is a couple of weeks old), but I've been swayed by the reaction to it, with one writer calling it "Attention Is All You Need (V2)".
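
To make the "levels with their own update rate" idea concrete, here is a toy sketch. It is not the authors' method, and the paper's formulation is far more general. It assumes each level is a linear associative memory (a matrix `M` that maps a key `k` to a value `v`) trained by gradient descent on `0.5 * ||M k - v||^2`, and that levels differ only in how often they update. The names `Level`, `period` and `train` are hypothetical, chosen for illustration.

```python
import random

def matvec(M, k):
    """Apply a linear associative memory M to a key vector k."""
    return [sum(m * x for m, x in zip(row, k)) for row in M]

def grad(M, k, v):
    """Gradient of 0.5 * ||M k - v||^2 with respect to M, i.e. (M k - v) k^T."""
    err = [p - t for p, t in zip(matvec(M, k), v)]
    return [[e * x for x in k] for e in err]

def add(A, B, scale=1.0):
    """Return A + scale * B for two matrices of equal shape."""
    return [[a + scale * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def zeros(n, m):
    return [[0.0] * m for _ in range(n)]

class Level:
    """Hypothetical optimization level: a memory matrix with its own update period."""

    def __init__(self, dim, period, lr):
        self.M = zeros(dim, dim)
        self.period = period        # apply an update once every `period` steps
        self.lr = lr
        self.acc = zeros(dim, dim)  # gradients gathered since the last update

    def observe(self, g, step):
        self.acc = add(self.acc, g)
        if (step + 1) % self.period == 0:
            # Step along the averaged gradient, then reset the accumulator.
            self.M = add(self.M, self.acc, -self.lr / self.period)
            self.acc = zeros(len(self.M), len(self.M[0]))

def train(pairs, levels):
    """Predict with the sum of all levels; each level then updates at its own rate."""
    losses = []
    for step, (k, v) in enumerate(pairs):
        total = levels[0].M
        for lvl in levels[1:]:
            total = add(total, lvl.M)
        pred = matvec(total, k)
        losses.append(0.5 * sum((p - t) ** 2 for p, t in zip(pred, v)))
        g = grad(total, k, v)
        for lvl in levels:
            lvl.observe(g, step)
    return losses

# Usage: learn a random linear key -> value mapping with a fast and a slow level.
random.seed(0)
DIM = 4
W_true = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]
pairs = []
for _ in range(400):
    k = [random.uniform(-1, 1) for _ in range(DIM)]
    pairs.append((k, matvec(W_true, k)))

fast = Level(DIM, period=1, lr=0.1)  # updates on every example
slow = Level(DIM, period=8, lr=0.1)  # updates once per chunk of 8 examples
losses = train(pairs, [fast, slow])
print(f"mean loss, first 20 steps: {sum(losses[:20]) / 20:.4f}")
print(f"mean loss, last 20 steps:  {sum(losses[-20:]) / 20:.4f}")
```

Both levels optimize the same objective here; what separates them is the update frequency and the slice of the data stream (the "context flow") each update summarizes. The fast level reacts to every example, while the slow level only sees averages over chunks.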
