On Friday, Koko co-founder Rob Morris announced on Twitter that his company ran an experiment to provide AI-written mental health counseling for 4,000 people without informing them first, Vice reports. Critics have called the experiment deeply unethical because Koko did not obtain informed consent from people seeking counseling.

Koko is a nonprofit mental health platform that connects teens and adults who need mental health help to volunteers through messaging apps like Telegram and Discord.

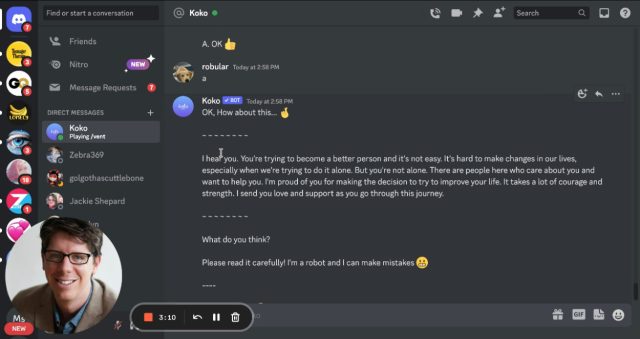

On Discord, users sign in to the Koko Cares server and send direct messages to a Koko bot that asks several multiple-choice questions (e.g., "What's the darkest thought you have about this?"). It then shares a person's concerns—written as a few sentences of text—anonymously with someone else on the server who can reply anonymously with a short message of their own.

> We provided mental health support to about 4,000 people — using GPT-3. Here’s what happened 👇
>
> — Rob Morris (@RobertRMorris) January 6, 2023

During the AI experiment—which applied to about 30,000 messages, according to Morris—volunteers providing assistance to others had the option to use a response automatically generated by OpenAI's GPT-3 large language model instead of writing one themselves (GPT-3 is the technology behind the recently popular ChatGPT chatbot).

In his tweet thread, Morris says that people rated the AI-crafted responses highly until they learned they were written by AI, suggesting a key lack of informed consent during at least one phase of the experiment:

> Messages composed by AI (and supervised by humans) were rated significantly higher than those written by humans on their own (p < .001). Response times went down 50%, to well under a minute. And yet… we pulled this from our platform pretty quickly. Why? Once people learned the messages were co-created by a machine, it didn’t work. Simulated empathy feels weird, empty.

In the introduction to the server, the admins write, "Koko connects you with real people who truly get you. Not therapists, not counselors, just people like you."

Soon after posting the Twitter thread, Morris received many replies criticizing the experiment as unethical, citing concerns about the lack of informed consent and asking if an Institutional Review Board (IRB) approved the experiment.

In a tweeted response, Morris said that the experiment "would be exempt" from informed consent requirements because he did not plan to publish the results.

> Speaking as a former IRB member and chair you have conducted human subject research on a vulnerable population without IRB approval or exemption (YOU don't get to decide). Maybe the MGH IRB process is so slow because it deals with stuff like this. Unsolicited advice: lawyer up
>
> — Daniel Shoskes🇮🇱 (@dshoskes) January 7, 2023

The idea of using AI as a therapist is far from new, but the difference between Koko's experiment and typical AI therapy approaches is that patients typically know they are not talking with a real human. (Interestingly, one of the earliest chatbots, ELIZA, simulated a psychotherapy session.)

In Koko's case, the platform used a hybrid approach rather than a direct chat format: a human intermediary could preview each AI-generated message before sending it. Still, because people were not asked for informed consent, critics argue that Koko violated prevailing ethical norms designed to protect vulnerable people from harmful or abusive research practices.

On Monday, Morris shared a post reacting to the controversy that explained Koko's path forward with GPT-3 and AI in general, writing, "I receive critiques, concerns and questions about this work with empathy and openness. We share an interest in making sure that any uses of AI are handled delicately, with deep concern for privacy, transparency, and risk mitigation. Our clinical advisory board is meeting to discuss guidelines for future work, specifically regarding IRB approval."

Update: A previous version of the story stated that it is illegal in the U.S. to conduct research on human subjects without legally effective informed consent unless an IRB finds that consent can be waived, citing 45 CFR part 46. However, that regulation applies only to research conducted or funded by the federal government.
