Tools for Building Tutors, and Tutors for Computing Education

June 29, 2010 at 4:15 pm

I took a workshop this morning on building intelligent tutoring systems. That’s surprising if you knew me even 10 years ago, when I thought that intelligent tutoring systems were an interesting technology but a bad educational idea. I saw tutors as fancy worksheets that deadened education and taught only the kinds of things that weren’t worth teaching. Then I spent the last eight years trying to figure out how to teach computing to people who do want to learn about computing but don’t want to become professional software developers (i.e., Media Computation).

- I’ve come to realize that there are students who need drill-and-practice kinds of activities to succeed, for whom discovery or inquiry learning is more effort than it’s worth. I recognize that in myself — I find economics fascinating and enjoy reading about it, but I’m not interested enough in economics to (for example) sit for hours with an economic simulator to figure out the principles for myself.

- I also now believe that even those students who do want to discover information for themselves still need a bunch of foundational knowledge on which to base their discoveries. A student who wants to figure out something about computing using Python still has to learn enough Python to be able to use it as a tool. It’s not worth anybody’s time to learn Python syntax through trial-and-error discovery or inquiry learning.

I am now interested in tools like intelligent tutoring systems to help students learn foundational skills and concepts as efficiently as possible.

The workshop this morning was short, only three hours long. Still, we all built simple model-tracing tutors for a single mathematics problem, and I think most of us started building a tutor for something that we were interested in. I started building a tutor that would lead a student through writing the decreaseRed() function that we start with in both the Python and Java CS1 books.
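
For readers who have not seen it, here is a minimal sketch of what a decreaseRed-style function does. It works on a plain list of (red, green, blue) tuples rather than the picture objects used in the CS1 books, and the name `decrease_red` and the default `factor` of 0.5 are assumptions made for illustration.

```python
def decrease_red(pixels, factor=0.5):
    """Return a new pixel list with every red channel scaled by `factor`.

    `pixels` is a list of (red, green, blue) integer tuples; this stands in
    for a real picture object and is only an assumption for this sketch.
    """
    return [(int(red * factor), green, blue) for (red, green, blue) in pixels]


# A tiny two-pixel "picture" used only to exercise the function.
picture = [(200, 100, 50), (10, 20, 30)]
print(decrease_red(picture))
```

A tutor for this exercise would need to recognize each step a student takes toward a loop like this one: visit every pixel, read its red value, and write back a smaller one.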

The Cognitive Tutor Authoring Tools (CTAT) that the CMU folks have built are amazingly cool! They’ve built Java and Flash versions, but the Flash version is actually totally generic. Using a socket-based interface, the CTAT for Flash tool can observe behavior to construct a graph of potential student actions, which can be labeled with hints, structured into success/failure paths, made ordered/unordered, and made generic with formulas. The tool can also be used for creating general rule-based tutors. CTAT really is a general tutoring engine that can be integrated into just about any kind of computational activity. I’m still wrapping my head around all the ways to use this tool.
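
To make the idea of a graph of student actions concrete, here is a rough sketch in Python. It is not CTAT's data model or API; the `Step` and `BehaviorGraph` classes, the state names, and the hint text are all hypothetical, and ordering constraints and formulas are left out.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    action: str           # the student input this edge matches
    next_state: str       # state reached when the action is accepted
    hint: str = ""        # hint offered when the student asks for help
    correct: bool = True  # False marks a known error path


@dataclass
class BehaviorGraph:
    start: str
    edges: dict = field(default_factory=dict)  # state -> list of Step

    def add(self, state, step):
        self.edges.setdefault(state, []).append(step)

    def trace(self, state, action):
        """Classify a student action taken in `state`."""
        for step in self.edges.get(state, []):
            if step.action == action:
                if step.correct:
                    return "correct", step.next_state
                return "known-error", state  # stay put on a failure path
        return "unrecognized", state

    def hint(self, state):
        """Return the hint on the first correct edge out of `state`."""
        for step in self.edges.get(state, []):
            if step.correct:
                return step.hint
        return ""


# Hypothetical first two steps of a tutor for writing decreaseRed().
graph = BehaviorGraph(start="header")
graph.add("header", Step("def decreaseRed(picture):", "loop",
                         hint="Define a function that takes a picture."))
graph.add("loop", Step("for p in getPixels(picture):", "body",
                       hint="Visit every pixel in the picture."))
graph.add("loop", Step("for p in picture:", "loop", correct=False))

print(graph.trace("loop", "for p in picture:"))
print(graph.hint("loop"))
```

Matching exact strings is far cruder than what a real authoring tool does, but it shows why each edge needs its own hint and its own success or failure label.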

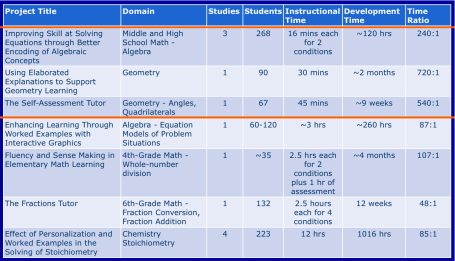

My biggest “Aha!” (or maybe “Oh No!”) moment came from this table:

First, I’d never realized that 30 minutes of activity in the famous Geometry Tutor took two months to develop! The whole point of the CTAT effort is to reduce these costs. This table gave me new insight into what it’s going to take to meet President Obama’s goal of computational, individualized tutors. A typical semester course in college is about three contact hours and 10-15 hours of homework per week for 15 weeks. Let’s call it 13 hours of scripted learning activity a week, for a total of 195 hours. The best ratio on that table is 48:1, that is, 48 hours of development for one hour of student activity. 9360 development hours (for those 195 hours at a 48:1 ratio), at 40 hours per week, is 234 weeks, or about four and a half person-years of effort to build a single college semester course. That’s not beyond reason, but it is certainly a sobering number. A full-year high school course, at 45 minutes a day, five days a week, for 30 weeks is 112.5 student hours, which is (again using the best case of 48:1) 5400 development hours. That is 135 weeks, so two and a half person-years of effort is a minimum to produce a single all-tutored high school course.
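
The arithmetic above is easy to check and to rerun with other ratios. The following is a small sketch; the function name `development_effort` and the choice of 52 working weeks per person-year are assumptions, and a shorter working year would push the person-year figures higher.

```python
def development_effort(student_hours, ratio=48, hours_per_week=40,
                       weeks_per_year=52):
    """Return (development hours, weeks, person-years) for a course.

    `ratio` is development hours per hour of student activity; 48 is the
    best case quoted from the table. `weeks_per_year` is an assumption.
    """
    dev_hours = student_hours * ratio
    weeks = dev_hours / hours_per_week
    return dev_hours, weeks, weeks / weeks_per_year


college_hours = 13 * 15             # 13 hours a week for 15 weeks
high_school_hours = 0.75 * 5 * 30   # 45 minutes a day, 5 days, 30 weeks

print(development_effort(college_hours))
print(development_effort(high_school_hours))
```

Swapping in a less optimistic ratio from the table only requires changing the `ratio` argument.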

Here’s another great role for computer scientists: Build the tools to make these efforts more productive, and make the tools easier to use and easier to understand so that a wider range of people can engage in the effort. CTAT is great, but still requires a hefty knowledge and time investment. Can we make that easier and cheaper?

Entry filed under: Uncategorized. Tags: educational technology, high school, higher education, undergraduates.

7 Comments

1. Raymond Lister | June 30, 2010 at 12:31 am

I think computer-based tutorials will not be successful in any area where it is necessary for the computer system to reason about what the student may be thinking, and a good human-based pedagogy is not yet developed – I think computer programming is an area that lacks a good human-based pedagogy.

President Obama’s goal of computational, individualized tutors does remind me, in its philosophical orientation, of the 1980s Japanese Fifth Generation Project.

If the above assertion resonates with the reader, then I recommend that you try reading Winograd and Flores, “Understanding Computers and Cognition”. That book is not an easy read for most computer scientists, given our undergraduate and postgraduate education in the rationalistic tradition, but it probably is the most accessible read for computer scientists.

2. Mark Guzdial | June 30, 2010 at 10:22 am

Raymond, do you forego giving students textbooks or other pedagogical tools, because we don’t yet have good human-based pedagogy for computer science? When I was in Education grad school, one of the definitions of Education that was offered was “Psychology Engineering.” Engineers often have to invent processes and rules-of-thumb before the science gets there. As educators, we’re in the same place: we have to teach people, even if we don’t yet have the science telling us how it all works.

I used to work with Chemical Engineers on tools to teach modeling. We built models of these large chemical engineering plants. I’d ask them, “Okay, now what happens as we start the system.” And the answer was, “Really, we don’t know.” Most of Chemical Engineering explains the steady-state. Starting up a refinery or pumping system is a sort of black art — they’re learning all the time, but making it all work is still a matter of engineers who know how to do it even if they can’t explain what’s really happening.

Sometimes, we educators have to do that, too. The evidence I’ve seen on cognitive tutors is promising. The work on tools like Practice-It and CodeBat is excellent and much needed. Building more tutors continues work in this vein. I’m not trying to build another “The Coordinator.” I’d like to build more tools for the computing teachers’ toolchest.

3. Raymond Lister | July 2, 2010 at 2:16 am

Mark wrote, “Raymond, do you forego giving students textbooks or other pedagogical tools, because we don’t yet have good human-based pedagogy for computer science?”

I think that’s not quite the right analogy. You are not proposing to *use* existing automated “cognitive tutors”, but to *build* them. So a better analogy would be “Raymond, do you *write* textbooks when you don’t believe you have a good human-based pedagogy for computer programming?” To that question, my answer is, “I haven’t written a textbook for that very reason”.

Another problem with the analogy is that it doesn’t distinguish between teaching practice and CSEd research. What I do in my teaching practice is bricolage, which Wikipedia describes as “to make creative and resourceful use of whatever materials are at hand”. (For a more sophisticated description of bricolage, and the difference between bricolage and science, the interested reader might try to read the book by Claude Lévi-Strauss ‘The Savage Mind’ – but I confess I found it to be a very hard read.) In the *practice* of teaching, as part of that bricolage, I do make creative use of whatever materials are at hand, such as bits and pieces out of textbooks, and perhaps bits and pieces from computer-based tools, but it doesn’t follow that my bricolage also defines my research.

[One more thing about this textbook analogy, perhaps just an amusing non sequitur … last time I taught a class, over 90% of the class did NOT even BUY the textbook! Almost all those students passed, and many achieved high grades, so maybe those students know something about textbooks and learning that I don’t know!]

Mark wrote, “When I was in Education grad school …”

Your graduate school experience is clearly the dominant influence on your CSEd research orientation. And why not? You were in the right place for that time. Soloway and his associates did the right sort of research for their time, and they did it about as well as anybody could. But the difference between your CSEd research orientation and my CSEd research orientation is how we each view the Soloway et al. legacy 20+ years later. You see me and some other CSEd researchers as people who are not sufficiently aware of Soloway et al.’s work, and as a consequence we are at best doomed to repeat the Soloway et al. work. For example, in a blog posting some months ago, you characterized the work that I and others did as part of the ITiCSE 2004 Leeds working group as a recapitulation of work in Spohrer’s thesis. My motivation to start that ITiCSE working group was a desire to explore a new philosophical approach to studying the novice programmer, and from that perspective the Leeds working group paper describes research that is fundamentally different from the research in Spohrer’s thesis, and all work in the Soloway tradition.

I can’t prove it, not yet, but my hunch is that Soloway et al.’s work was a necessary research program that turned out to be a failure. (I’m using “research program” in the sense that Lakatos used it.) That Soloway et al.’s work failed is in no way a criticism of their work at that time. Soloway et al.’s work was a research program we had to have before we could move on to better ways of thinking about novice programmers. In my work from 2004 onwards, I’ve been trying to develop a new way of thinking about the novice programmer.

Maybe your view of the Soloway legacy will turn out to be right, and mine wrong. Both of our positions are open to an empirical answer, and only time will tell who is right. (And maybe both of us are wrong.) However, I disagree profoundly when you express implicitly the view that work on cognition in the rationalist/computational tradition (e.g. Soloway and Anderson) is something that is based upon a self-evident truth, the only possible truth. Such a view denies legitimacy to research based in other traditions.

While it is contrary to the traditional university education of a computer scientist to imagine non-rationalist / non-computational ways for describing the mind of the novice programmer, alternative ways of describing human thinking have been articulated outside of computer science. Most of that work traces its roots to the philosophers Merleau-Ponty and Heidegger. I won’t pretend to understand that foundational work by those two philosophers, but I feel I do understand the interpretation of that work, in the context of artificial intelligence / cognitive science, by Hubert Dreyfus (see his book “What Computers Can’t Do”, subsequently and amusingly republished with the title “What Computers Still Can’t Do”). There’s also the biologically inspired way of describing human thinking, which has its roots in people like Maturana and Varela. (As I posted earlier, the most accessible read on that work is the book by Winograd and Flores, “Understanding Computers and Cognition”.) My own most recent work is influenced by the bio-psychological work of the neo-Piagetians.

Mark wrote, “I used to work with Chemical Engineers on tools to teach modeling. We built models of these large chemical engineering plants. I’d ask them, “Okay, now what happens as we start the system.” And the answer was, “Really, we don’t know.” Most of Chemical Engineering explains the steady-state. Starting up a refinery or pumping system is a sort of black art …”

If I may borrow and distort your analogy for my own evil ends … Just as chemical engineers don’t attempt to formally model what they don’t understand, I hesitate to build formal models of something that I don’t understand – how novice programmers learn. Also, to run with the chemical analogy some more, the human mind is *never* in a steady-state, and requires a non-traditional way of thinking, a way of thinking pioneered by Nobel Prize winning chemist Ilya Prigogine. His popular science book, “Order Out of Chaos”, offers a non-rationalist way of thinking about all non-linear systems, not just chemical systems, and I have found his ideas to be a fertile source of inspiration for my own thinking about novice programmers.

4. Mark Guzdial | July 3, 2010 at 1:48 pm

Raymond, are you familiar with Ralph Waldo Emerson’s essay The American Scholar? His model of the scholar is one that gets his hands dirty (“I grasp the hands of those next me, and take my place in the ring to suffer and to work”) and actually does something:

Scholarly analysis is appropriate for theory-building. In education especially (where we are so bad at predicting outcomes from theory), the test of any theory is an intervention. Educational theory is only valuable if it is useful in prediction or in design of an intervention.

Raymond, you should be aware that the “instruction as engineering psychology” idea came from Robert Glaser (not Elliot Soloway), past president of the American Educational Research Association and awardee of the American Psychological Association’s award for contributions to education. The tradition you are rejecting is not just Soloway and Anderson, but most of cognitive science, education, and psychology today. You’re absolutely right — it could all be wrong. But that’s where I see the best evidence, and that’s the approach I’m building upon.

My job as a professor and as a researcher is to further that work in which I find value, and to ignore or explicitly critique and reject work in which I do not find value. I’m not making any claims about “self-evident” or “only possible truth.” I’m making judgments based on my interpretation of the available data and analyses.

You may have this forum (my blog) confused with a journal, which should publish papers that present sound evidence supporting theory, coming from any tradition. This is my blog where I represent what I think. It’s part of my job to reject theory that I believe is erroneous or unsupported. If you have evidence to support another theory, please do publish it or put it in your own blog. If I agree with you, I’ll build on it, too.

I didn’t claim that your work at ITiCSE 2004 was a “recapitulation” of Spohrer’s work. I said that Spohrer’s theory could be used to explain your results. That’s something we look for in good theory, that it can be predictive and explanatory. I believe that you started from a different approach. In my opinion, your theory and approach have not yet achieved something beyond the rationalist/computationalist perspective that you reject. I DO hope that you do; that would be a real achievement and a contribution to the community.

5. Alan Kay | June 30, 2010 at 7:58 am

Hi Mark

As you know, I’ve been spending about a week a month in DC trying to get all concerned, including funders, to set up a community of research to get to the next important levels of this. Personal computing-and-Internet was a 12 year effort and had about 15 institutions involved.

The original Plato system at the U of Illinois produced about 10,000 hours of “courseware”, of which they deemed about 2000 to be good (by the standards of the times). The good stuff took about 100 author hours to make one good student hour. So this was daunting then, and is still daunting today.

But it isn’t so surprising given what has to be done. And if a text or source book provides “a chapter a week” of instruction, then it is likely that the author put in a lot of hours for each student hour also.

This splits the problem. There are “a lot of 3rd graders”, and good brute force courseware, “John Seely Brown” style, even if it cost a million dollars an hour to produce, easily amortizes over the number of learners and the years that the courseware is relevant.

(One can worry about bad courseware, just as we do worry about the many bad text books ….)

However, when we set up to invent personal computing at PARC, part of our plan was the realization that we could cover a lot of ground for the entire problem if we could invent desktop publishing — this would provide both the UI scheme, and also provide the link to the authoring that the end users would need to do.

And I think the general problems of making computer tutors are also linked with something like this. We need to invent a general very capable authoring environment for people who want to make “dynamic books that help people learn”. I’ve spent a little time talking to the very impressive Ken Koedinger (the force behind a lot of the Carnegie Learning stuff, including the Geometry Tutor and the CTAT tools), and he agrees.

I see this as a 10 year research thrust by a community of researchers. (I think it should start by doing a brute force but completely comprehensive tutor for some subject of importance, that can be improved by experience, and can act as a model and target for systems that can make such tutors more automatically from less direct advice.)

Cheers,

Alan

6. Mark Guzdial | June 30, 2010 at 10:31 am

Hi Alan,

I figured that this blog post was in line with your agenda. One of the parts of the Pittsburgh Science of Learning Center (PSLC) effort that I found intriguing was the coordinated effort to gather data across ALL their tutors, those created by CMU/Pitt and those created by others using their tools. They have an enormous corpus, and they’re letting the data miners have at it.

One of their intriguing recent results was that they were able to predict Massachusetts students’ performance on 11th and 12th grade state-mandated standardized tests from activity in 8th grade tutors better than the 8th grade standardized tests did. That’s a really WOW! idea: to use students’ everyday work rather than performance on a one-shot test, and have the same quality of evaluative/assessment data as one would from the test.

Vincent Aleven also showed during the workshop yesterday that they collect error-rate data for use in testing design assumptions. If you thought that Questions 3, 5, and 8 were all testing the same core idea, they can tell you empirically if students are improving on those questions in similar ways, i.e., whether the error rate curve declines as students visit those questions. That provides you, the designer, feedback on your design assumptions, something that is very hard to get from any other kind of pedagogical tool.
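
As a rough illustration of that kind of check, the sketch below computes an error rate at each successive practice opportunity for a set of questions assumed to test the same idea. It is not the PSLC tooling; the function names and the log format are hypothetical, and the six-row log is made-up toy data, not real student results.

```python
from collections import defaultdict


def error_rate_curve(attempts, questions):
    """Error rate at the 1st, 2nd, 3rd... opportunity on `questions`.

    `attempts` is a chronological list of (student, question, was_error)
    tuples; this log format is an assumption for the sketch.
    """
    seen = defaultdict(int)     # opportunities so far, per student
    errors = defaultdict(int)   # errors at each opportunity number
    totals = defaultdict(int)   # attempts at each opportunity number
    for student, question, was_error in attempts:
        if question not in questions:
            continue
        seen[student] += 1
        opportunity = seen[student]
        totals[opportunity] += 1
        errors[opportunity] += int(was_error)
    return [errors[k] / totals[k] for k in sorted(totals)]


def is_declining(curve):
    """True when the error rate never rises between opportunities."""
    return all(a >= b for a, b in zip(curve, curve[1:]))


# Made-up toy log: two students visiting Questions 3, 5, and 8 in order.
toy_log = [
    ("s1", 3, True), ("s2", 3, True),
    ("s1", 5, True), ("s2", 5, False),
    ("s1", 8, False), ("s2", 8, False),
]
curve = error_rate_curve(toy_log, questions={3, 5, 8})
print(curve, is_declining(curve))
```

If the curve for a group of questions stays flat or jumps around, that is a signal that the questions may not share one underlying idea after all.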

Cheers,

Mark

7. Alan Kay | June 30, 2010 at 1:02 pm

One of the (too many) things I’ve been advocating as part of a major effort to jump a few qualitative levels* in computer tutoring (and have chatted with Ken Koedinger about) would be to really do a “John Seely Brown” effort on some standard curriculum (“standard” so there are reasonable norms for comparison), doing what “BUGGY” did for 3rd grade subtraction: namely, to find essentially every bug children make (JSB and Richard Burton looked at a lot of kids, and we can look at millions now), and to try to use a variety of models to select gentle good advice and examples to show.

The other thing that can be gathered is a much more flexible “noticer” of how children get to good solutions, and this can start to rival the flexibility of good teachers in this regard.

This is far from the whole solution, and is likely to only work for very simple learning topics, but in those areas it could be made really comprehensive, really pleasant, and really foolproof. This would be a very good kind of user interface to show to a wide variety of both practitioners and skeptics.

An important thing about the impressive Carnegie stuff is that they themselves realize that their UIs are not very good (not good enough by far to be used without considerable adult help in how to use the system). So one large part of the next level here is to try to get the UIs so they really allow the learners to get into them without a lot of outside help.

(Some of the iPad apps I’ve seen are really good in this regard. And much further is possible.)

As I said, I think this is a 10 year research effort by a number of research groups in a community of interest, but I think there are a variety of paths for partial solutions, and that quite a bit to help students can be done without having to invent general AI.

I’m particularly interested in what can be done in K-8 UIs that can help the children use their considerable intelligence to notice and focus.

Cheers,

Alan