The technology changes of the past three decades continue to be bounded by challenges that impede our efforts to effectively exploit the full value of IT investments.

Plus ça change, plus c'est la même chose.

("The more things change, the more things stay the same.")

January 24, 1984, will be remembered in the technology world and elsewhere as the day that Apple launched the Macintosh computer. In the crowded Flint Center at De Anza College, a community college across the street from the Apple campus in Cupertino, California, Steve Jobs pulled a beige computer out of a gray travel bag and formally introduced the Mac to the world.1

Less known or remembered about the day is that concurrent with the Macintosh launch at De Anza College, undergraduates at Drexel University in Philadelphia were the first college students anywhere in the world to see the Mac up close and personal. In the run-up to the 1984 Macintosh launch, Apple negotiated agreements with some two dozen, primarily private, Ivy League or other highly selective colleges and universities to sell Macs to college students for $1,000 (well below the retail price of $2,495). Drexel, then viewed by many as a blue-collar engineering school that lived in the shadow of the University of Pennsylvania, was somewhat of an outlier in the group of largely elite institutions that made up the Apple University Consortium (AUC). Yet Drexel, under the technology leadership of Brian Hawkins (who would become the founding president of EDUCAUSE fourteen years later), was the first college or university to sign a Macintosh purchase agreement with Apple, in the spring of 1983; moreover, the Drexel agreement, unlike most of the other AUC contracts, provided a Mac to all first-year students. And so because Drexel made the early and significant commitment to Apple, Drexel students were the first in the nation to get Macs through campus-purchase programs.

Given the current ubiquity of technology on college and university campuses and in the consumer market, it can be difficult to understand the excitement and impact of the first generation of personal computers—IBM PCs and Apple Macs—some thirty years ago. The arrival of these devices on campuses was the catalyst for what, in 1986, EDUCOM Vice President Steve Gilbert and I called the "new computing" in higher education: "Thousands of faculty members and administrators have decided that 1986 is the year that they will have a personal relationship with computing. . . . Most academics now getting started on computing are professionals who haven't been computer users before and who will never think of themselves as computer experts. What they realize is that they are embarking on a journey they can no longer delay."2

Great Expectations

That technology journey—our technology journey—has taken all of us many places over the past three decades. The journey has been fueled, in part, by great expectations for the use of new technologies in education—expectations that were articulated well before the first PCs and Macs even arrived on college and university campuses. For example, in a 1913 newspaper interview, the prolific inventor Thomas Edison proclaimed: "Books will soon be obsolete in the public schools. Scholars will be instructed through the eye. It is possible to teach every branch of human knowledge with the motion picture. Our school system will be completely changed in ten years."3

A half century later, in a 1966 Scientific American article, Stanford Professor Patrick Suppes observed: "Both the processing and the uses of information are undergoing an unprecedented technological revolution." Anticipating that technology could bring great benefits to education, Suppes added: "One can predict that in a few more years millions of schoolchildren will have access to what Philip of Macedon's son Alexander enjoyed as a royal prerogative: the personal services of a tutor as well-informed and responsive as Aristotle."4

Note that Edison's 1913 prediction for the demise of books in public schools was offered fourteen years before sound came to motion pictures. Suppes's 1966 prediction of the digital Aristotle was published just a year after IBM released the game-changing IBM 360 mainframe computer and about a decade before the arrival of the Apple II computer. Edison did not secure his fame or fortune by providing motion pictures that would supplant textbooks in public schools. Suppes, however, went on to co-found the Computer Curriculum Corporation in 1967, one of the first companies to create instructional software for education.

A more sobering view of the challenges involved in deploying technology resources in education was put forth in 1972 by George W. Bonham, founding editor of Change magazine, an influential publication in higher education:

For better or worse, television today dominates much of American life and manners. . . . Part of [the] lackluster record of the educational uses of television is of course due to the heretofore merciless economies of the medium. But fundamental pedagogic mistrust of the medium remains also a fact of life. The proof of the pudding lies in the fact that on many campuses today fancy television equipment . . . now lies idle and often unused. . . . Academic indifference to this enormously powerful medium becomes doubly incomprehensible when one remembers that the present college generation is also the first television generation.5

Substitute the word technology for television, and Bonham's assessment speaks volumes about similar challenges inherent in current efforts with information technology: the high costs of creating useful and effective instructional content, pedagogic distrust (or, at a minimum, ambivalence) about the impact and benefits of instructional content and technologies, and expensive equipment lying fallow on college and university campuses. Moreover, the irony of Bonham's assessment is that the "present college generation" he referenced in 1972 is the middle-aged (and older) members of today's professorate!

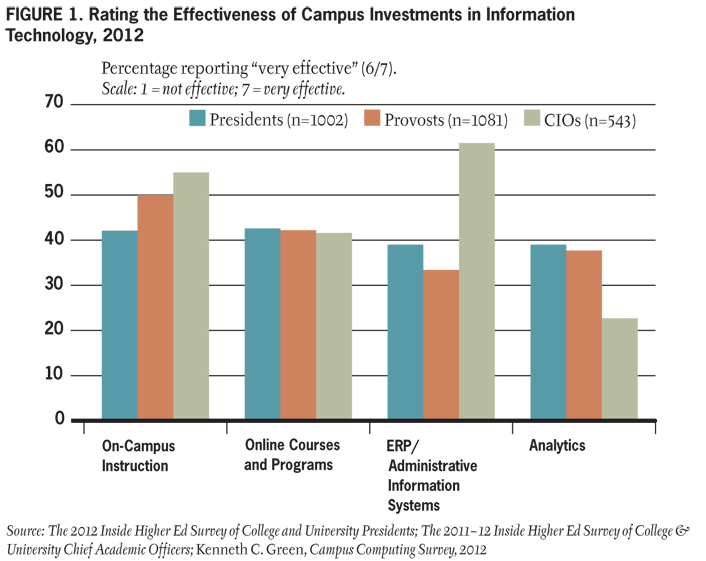

Campus leaders' perspectives on the impact and effectiveness of the institutional investment in information technology are reflected in three roughly concurrent surveys that I conducted from December 2011 to September 2012. The three groups of survey participants—presidents, chief academic officers, and chief information officers (CIOs) across all sectors of higher education—were asked to assess eight separate areas of technology investment: academic support services; alumni services; on-campus instruction; online instruction; libraries; management and operations; research and scholarship; and student services. For the purposes of this discussion, it is useful to look at the responses of presidents, provosts, and CIOs on the "big four" issues: on-campus instruction; online instruction; management and operations; and analytics.

As shown in figure 1, less than half (and often just 40 percent) of presidents and provosts assessed campus IT investments to be "very effective" (a 6 or 7). CIO assessments were slightly different from those of presidents and provosts, but not by much—except for the over 60 percent of CIOs who assessed the institutional investment in "administrative information systems and operations" as "very effective."6

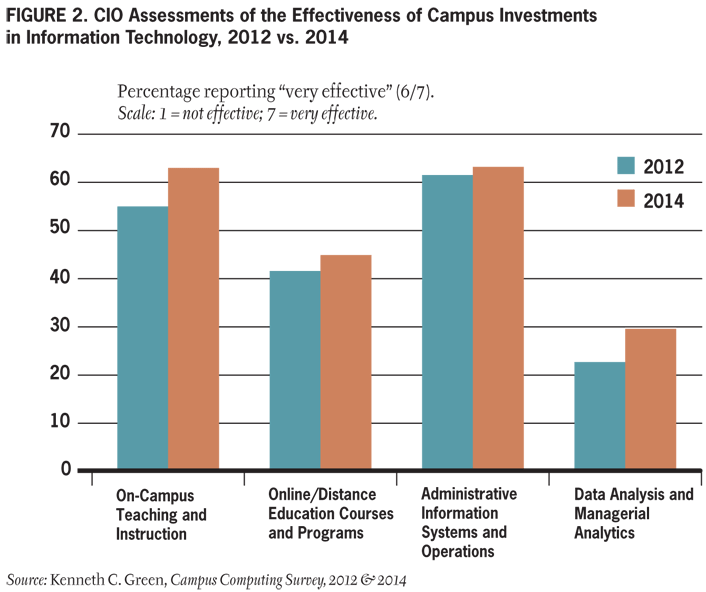

By 2014, the percentage of CIOs reporting "very effective" on these same four metrics improved slightly (see figure 2); comparable data for presidents and provosts is not available.

Unfortunately, the data provides clear evidence that the great expectations for technology to aid and improve instruction, operations, and data analysis have fallen short. Over the years, both technology providers and campus technology advocates/evangelists may have contributed to unrealistic expectations about how quickly an investment in information technology could deliver expected gains in instructional outcomes or institutional performance and productivity. A key responsibility of and challenge for IT leaders is to manage expectations and to communicate the effectiveness of IT investments. We can—and must—do better.

Plus Ça Change

The technologies that are pervasive and ubiquitous both on and off campus today have changed dramatically since the arrival of the first PCs and Macs in the mid-1980s. Without question, we have witnessed amazing technological change (the more things change) over the past three decades: hardware, software, the Internet, wireless networks that foster mobility, digital content, social media, and "big data" analytics. However, at least in the higher education arena, this change has been bounded by continuing challenges that often impede efforts to leverage and effectively exploit the full value of technology investments (the more they stay the same). These challenges largely involve the enabling infrastructure: integrating technology into instruction; improving productivity; furthering online education; recognizing and rewarding faculty who "do IT" in instruction; using data to aid and inform decision making; and managing expectations about information technology.

In many ways, colleges and universities now lag behind where they once led. Consumer markets and corporations move and adopt more quickly. Consequently, higher education's concurrent delay in implementation and effective exploitation of many common consumer- and corporate-sector technologies causes many students, faculty, administrators, board members, and other observers to ask: "Why can I do these things off campus but not on campus?"

Three decades into the much-discussed and often overhyped technology revolution in higher education, it is increasingly clear that the major technology challenges confronting higher education are not about technology per se (i.e., the things we buy). Rather, as EDUCAUSE publications have often noted, the challenges involve how we deploy various technologies effectively (the things we do with technology). And I would argue that perhaps most important, these challenges are about the people, program, policy, planning, political, and budget issues that often impede implementation efforts.

Technology Priorities

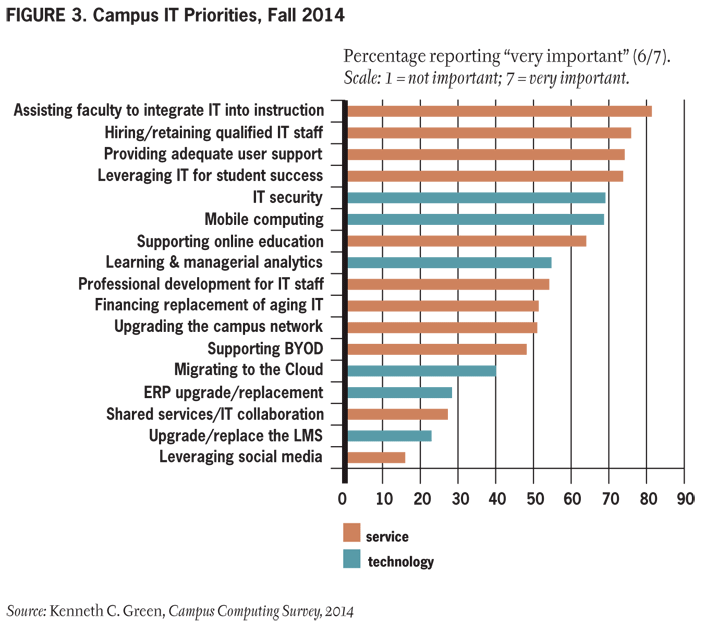

We should not be surprised that the biggest technology challenges focus on continuing efforts with the instructional integration of IT resources. That's the clear message that emerges from more than a decade of data about IT priorities from the annual Campus Computing Survey of CIOs and senior campus officials. For example, in the fall of 2014, 81 percent of the CIOs and senior IT officers participating in the survey identified the instructional integration of information technology as a "very important" campus IT priority over the next two to three years (see figure 3), followed by "hiring/retaining qualified IT staff" (76 percent), "providing adequate user support" and "leveraging IT for student success" (both at 74 percent), and "IT security" (69 percent). And although the fall 2014 numbers for top IT priorities vary slightly by sector (e.g., research universities vs. community colleges), there is great consistency about the top three items across sectors: instruction, staffing, and user support.7

How is higher education addressing the top technology priority of instructional integration of IT resources? Are colleges and universities assessing their work in this area?

Unfortunately, most institutions over the past decade or so have not invested in formal efforts to assess the impact of technology in instruction and learning; moreover, whereas some sectors have experienced modest gains, the numbers declined between 2002 and 2014 for public research and private research universities (see table 1) and generally remain low overall: under 30 percent across all sectors in the fall of 2014. Without a commitment to evaluating investments in innovation, we can't know what works.

Table 1. Campuses with a Formal Program to Assess the Impact of Information Technology on Instruction and Learning Outcomes

| | 2002 (%) | 2005 (%) | 2008 (%) | 2011 (%) | 2014 (%) | Percent Change 2002–2014 |
|---|---|---|---|---|---|---|
| All Institutions | 19.8 | 35.9 | 43.6 | 40.5 | 23.2 | 17.1 |
| Public Research Universities | 29.2 | 39.5 | 52.0 | 46.7 | 26.2 | -11.3 |
| Private Research Universities | 34.2 | 35.4 | 52.3 | 50.0 | 26.2 | -23.4 |
| Public BA/MA Institutions | 18.7 | 42.3 | 45.3 | 40.9 | 29.8 | 43.3 |
| Private BA/MA Institutions | 13.2 | 31.0 | 35.6 | 32.8 | 15.8 | 19.7 |
| Community Colleges | 21.7 | 34.4 | 45.1 | 44.5 | 27.5 | 26.7 |

Source: Kenneth C. Green, Campus Computing Survey (selected years)
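The "Percent Change" column in table 1 appears to report the relative change between the 2002 and 2014 percentages, not the difference in percentage points. A minimal Python sketch, assuming that interpretation, reproduces the published figures for two rows of the table. The function name `relative_change` is illustrative and does not come from the survey; other rows may differ slightly if the published changes were computed from unrounded survey data.

```python
def relative_change(start_pct: float, end_pct: float) -> float:
    """Relative change from start_pct to end_pct, expressed in percent."""
    return (end_pct - start_pct) / start_pct * 100.0


# 2002 and 2014 values copied from table 1 (percent of campuses).
rows = {
    "Private Research Universities": (34.2, 26.2),
    "Community Colleges": (21.7, 27.5),
}

for sector, (pct_2002, pct_2014) in rows.items():
    change = relative_change(pct_2002, pct_2014)
    points = pct_2014 - pct_2002  # simple difference in percentage points
    print(f"{sector}: {change:+.1f}% relative change "
          f"({points:+.1f} percentage points)")
```

The distinction matters when reading the table: community colleges rose by 5.8 percentage points, which is a relative gain of roughly 27 percent over the 2002 baseline.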

The Productivity Conundrum

Recent reports suggesting that productivity in the United States has declined should be a catalyst for the continuing conversation about productivity and technology in higher education. Some productivity experts argue that the productivity-enhancing impacts of new technologies (e.g., iPhones, social media) are less than the productivity gains that followed the emergence of the personal computer and the Internet three decades ago.8 And then there is the May 2015 assessment by Paul Krugman, a Princeton University economics professor and Nobel laureate: "The whole digital era, spanning more than four decades, is looking like a disappointment. New technologies have yielded great headlines, but modest economic results." Krugman added: "New technology is supposed to serve businesses as well as consumers, and should be boosting the production of traditional as well as new goods [and services]. The big productivity gains of the period from 1995 to 2005 came largely in things like inventory control, and showed up as much or more in nontechnology businesses like retail as in high-technology industries themselves. Nothing like that is happening now."9

"Great headlines, but modest results" (to paraphrase Krugman) might also be an appropriate characterization of technology and productivity in higher education. Admittedly, economists have a precise definition for productivity and specific metrics for measuring productivity. However, you'll need more than a good economics textbook to secure consensus on the meaning of "academic productivity" among faculty and administrators, with the latter recognizing the need to improve "academic productivity" as a way to reduce (or at least contain) the rising cost of higher education.

Indeed, much as we struggle with the meaning and attributes of quality in higher education, so too do we struggle with the meaning and attributes of productivity, particularly in the context of campus investments in and expenses for technology in research, instruction, operations and management, and support services. Yet there is an implied expectation that IT investments in higher education should result in improved productivity. The questions then become: How would we know? What should we measure?

Yes, we can probably measure productivity in the research domain, and it is likely significant. Simulations and analysis that once consumed huge computer resources for computation and storage and that previously required significant (and expensive) mainframe time are now routinely run, often quickly, on notebook, desktop, and lower-cost supercomputers.

It is in the other domains—instruction, operations and management, and support services—that the conversation about technology and productivity becomes problematic. To be sure, many of higher education's IT investments over the past three decades have made improvements in these domains. The Internet has brought rich digital content to students and faculty across all sectors, segments, and disciplines. Online registration and fee payment have saved countless students countless hours previously spent standing in long registration and payment lines at the beginning of each term. Analytic systems now automatically pour data into dashboards as part of the effort to aid and inform decision making. Monitoring systems send automatic e-mails to students and faculty when they detect signs that students may be falling behind in coursework. But in many ways, these examples reside at the margin, not the core, of the productivity conundrum. Despite these gains, higher education costs have not declined. The understanding that technology will enhance productivity and consequently reduce costs has not played out in most sectors of American higher education.

Although campus IT budgets may ebb and flow somewhat during good and bad economic times, institutions continue to spend significant sums on information technology—about 5 to 6 percent of the total institutional operating budget—for instruction, operations and management, and support services.10 Yet few would argue that individual institutions, as well as higher education as an "industry," are now more productive because of IT expenditures and investments.

MOOCs and Online Education

Online enrollments have exploded over the past fifteen years.11 Online courses and programs would seem to be the one higher education domain that could showcase the productivity gains from technology investments.

Yet despite the big gains in online enrollments across all sectors and segments, it is not clear that "going online" has reduced costs and increased instructional or institutional productivity. Part of the problem is that colleges and universities do not do a good job of dealing with cost accounting—particularly cost accounting for instructional programs. Instructional "costs" in online programs are often taken as the direct cost of faculty time, with little acknowledgment of any of the other costs involved in developing and supporting online programs: course and content development, the use of instructional support services, the time provided by IT support staff to assist students and faculty, and the true overhead costs involved in individual online courses and programs.

The productivity pressures are reflected in the fawning endorsements for MOOCs we witnessed several years ago. Part of the attraction of MOOCs was the potential for scale—the opportunity to enroll 10,000 or 100,000 students in a single course. But completion rates are dismal (in the single digits), user support issues are significant, development costs are high, and revenues (for the institutions that seek to make money from "free" courses) may be problematic.

Over the past two years, the response to the low-completion critique of MOOCs has involved a revisionist redefinition of "course completion" in the context of student intention. That is, course completion is calculated based on those whose intent, at the time of MOOC registration, was to complete the course, rather than just browse or audit. For example, a recent HarvardX report revealed that 22 percent of the "intend to complete" registrants across nine HarvardX courses earned a certificate.12 These numbers are better than the single-digit completion rates generally reported for the larger population of MOOC registrants, but something is missing here. Where is an appropriate (indeed, necessary!) comparison number for more "conventional" online courses or more traditional classroom-based courses? In the case of the latter courses—such as developmental math, introductory psychology, organic chemistry, or Elizabethan sonnets—almost all those who register probably "intend to complete" the course. Would the faculty, department chairs, and deans who supervise these courses be satisfied with a 22 percent or even a 35 percent completion rate? Unlikely.

In this context we might consider the data from a recent study—conducted by the Community College Research Center at Teachers College, Columbia University—of online and on-campus courses at two community colleges. Students who enrolled in (non-MOOC) online courses were almost twice as likely to withdraw from or fail the class as were their counterparts in face-to-face (F2F) courses. But even here, the completion rate was 70–80 percent for students in online courses and 80–90 percent for students in F2F courses.13 Also of interest may be a 2013 study of the completion rates for some 5,700 students enrolled in either (non-MOOC) online or traditional (F2F) courses at a public comprehensive university. Although there was a statistically significant difference in the completion rates (higher for F2F courses), both the online and F2F courses reported completion rates over 93 percent.14

The course completion numbers for non-MOOC online and F2F courses cited in these two reports are more than three to four times better than the 22 percent completion rate reported for the HarvardX students "who intend" to complete MOOCs. To date, the experience with MOOCs suggests that they are one point on the continuum of online education options for students and institutions. But at present, MOOCs may at best supplement, not supplant, more conventional approaches to both online and F2F courses.
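The "three to four times" comparison can be checked directly from the completion rates quoted in this section. The short Python sketch below uses only those reported rates; the variable and function names are illustrative, and the 93 percent figure is the floor reported for both course modes in the 2013 study.

```python
# Completion rates (percent) as quoted in the text; names are illustrative.
MOOC_INTEND_TO_COMPLETE = 22.0  # HarvardX "intend to complete" registrants

reported_rates = {
    "Community colleges, online (low end)": 70.0,
    "Community colleges, online (high end)": 80.0,
    "Community colleges, F2F (low end)": 80.0,
    "Community colleges, F2F (high end)": 90.0,
    "Public comprehensive university (floor)": 93.0,
}


def ratio_to_mooc(rate: float) -> float:
    """How many times larger a completion rate is than the MOOC rate."""
    return rate / MOOC_INTEND_TO_COMPLETE


for label, rate in reported_rates.items():
    print(f"{label}: {ratio_to_mooc(rate):.1f}x the MOOC rate")
```

Even the lowest conventional figure, 70 percent, is more than three times the 22 percent MOOC rate, and the 93 percent floor is more than four times that rate.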

Faculty Recognition and Reward

Across all sectors, most colleges and universities loudly and proudly proclaim their commitment to the innovative use of technology in online and on-campus instruction. Concurrently, most institutions continue to ignore and, more often, punish faculty members who would like to have their efforts in innovative and effective uses of IT resources in instruction considered in the review and promotion process. The conventional wisdom often offered to younger faculty is "don't do tech" until after they have earned tenure. Indeed, the issue of recognition and reward remains one of the most daunting issues facing faculty of all ranks—but especially junior (tenure-track) faculty—as they build their scholarly portfolios.

Data from the fall 2014 Campus Computing Survey reveals that on average, two-fifths of institutions support instructional innovation with grants to help faculty redesign courses or create simulations, ranging from 26.9 percent in private BA/MA institutions to 56.9 percent in public research universities.15 Yet too few institutions have expanded the algorithm for faculty review and promotion to include technology. Consider, for example, the trend data on "review and promotion" from the annual Campus Computing Survey. The good news is that in many sectors, the proportion of institutions that have expanded the faculty review and promotion criteria to include technology has increased dramatically. The exceptions are private research universities and private BA/MA institutions, where the numbers remain largely the same as they were in 1997. But even with the gains, the numbers in all sectors are still very low: less than 25 percent in the fall of 2014 (see table 2).

Table 2. Campuses with a Formal Program to Recognize and Reward the Use of Information Technology as Part of the Routine Faculty Review and Promotion Process

| | 1997 (%) | 2002 (%) | 2005 (%) | 2008 (%) | 2011 (%) | 2014 (%) | Percent Change 1997–2014 |
|---|---|---|---|---|---|---|---|
| All Institutions | 12.2 | 17.4 | 19.3 | 19.1 | 19.8 | 16.4 | 32.7 |
| Public Research Universities | 7.8 | 16.9 | 14.5 | 16.0 | 13.3 | 18.5 | 137.2 |
| Private Research Universities | 10.0 | 5.9 | 10.4 | 9.1 | 7.1 | 9.5 | -5.0 |
| Public BA/MA Institutions | 12.2 | 22.3 | 25.0 | 21.7 | 25.8 | 16.9 | 38.5 |
| Private BA/MA Institutions | 14.4 | 15.4 | 20.1 | 16.4 | 19.0 | 12.3 | -15.3 |
| Community Colleges | 12.1 | 18.2 | 19.8 | 24.6 | 25.5 | 23.9 | 97.5 |

Source: Kenneth C. Green, The Campus Computing Survey (selected years)

The survey data cited above provides clear evidence that despite the proclamations of presidents, provosts, and board chairs about how their institutions have made significant investments to leverage information technology for instruction, the operational decisions about tenure and promotion depend on the decisions of senior faculty and department chairs—and on whether these departmental leaders will recognize and support the technology efforts and activity of faculty into and through the review and promotion process.

If campus officials—including IT leaders—are truly committed to advancing the role of technology in instruction, then it is time for these leaders to stand up for and stand with the faculty who are doing this work, affirming the value of technology for those who wish to affirm it as part of their scholarly portfolio.

The Data Challenge

The third "A" in the accessibility, affordability, and accountability mandates of A Test of Leadership (the 2006 Spellings Commission report) was a clear directive to higher education and to college and university leaders to bring data to address the "remarkable shortage of clear, accessible information about crucial aspects of American colleges and universities."16

As I wrote in EDUCAUSE Review at the time: "The issue before [the higher education IT community] in the wake of the Spellings Commission report concerns when college and university IT leaders will assume an active role, a leadership role, in these discussions, bringing their IT resources and expertise—bringing data, information, and insight—to the critical planning and policy discussions about institutional assessment and outcomes that affect all sectors of U.S. higher education."17

Admittedly, we have made some important gains in the data domain. Technology providers have improved the range of the analytic resources they now offer campus clients. Colleges and universities are beginning to leverage real-time student data from the learning management systems and other sources to send warning notices to both faculty and students regarding course progress. On a broader scale, the EDUCAUSE Core Data Service (CDS), launched by former EDUCAUSE President Brian Hawkins in 2002, provides benchmarking data on various IT metrics. Whereas the Campus Computing Project focuses primarily on IT planning and policy issues, the CDS provides detailed institutional data on IT financials, staffing, and services.18

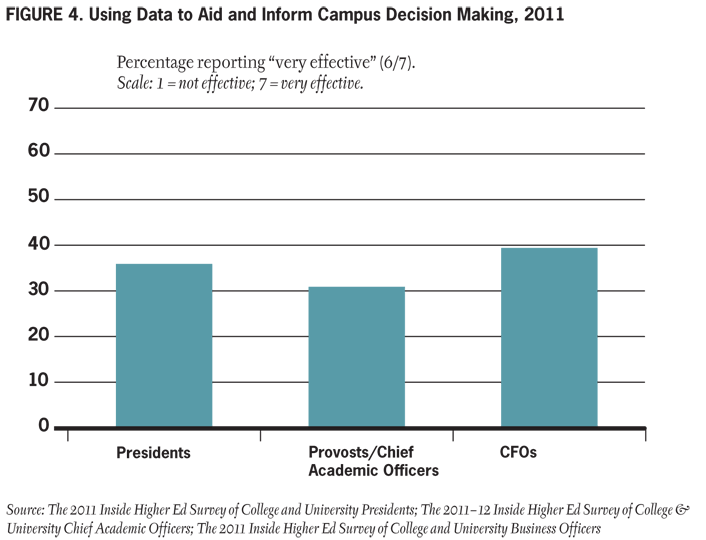

Yet in 2011, less than two-fifths of presidents, provosts, and chief financial officers ranked their institutions' use of data to aid and inform decisions as "very effective" (see figure 4). In 2012, only 22.7 percent of CIOs, 37.7 percent of provosts, and 39 percent of presidents rated their institutional investment in analytics as "very effective" (see figure 1). By 2014, just 30 percent of CIOs rated investment in analytics at their institutions as "very effective" (see figure 2).

Finally, in the effort to make better use of data for decision making, we also have to address the data culture in higher education. Too often, data is used as a weapon (What was done wrong?) as opposed to a resource (How can we do better? What's the path to doing better?). The model here should be continuous quality improvement: using data to aid and inform decision making and to help improve programs, resources, and services.

Priorities: What We Must Do Better

For the past several decades (and particularly over the past ten years), there has been much talk about the need for change in higher education. Concurrently, there has been much talk about technology as a catalyst for change. Yet as noted above, the key technology challenges that confront higher education are not about technology per se; rather, they are about our efforts to make effective use of technology resources, and they are about the people, planning, program, policy, and budget issues that often impede these efforts.

As we look ahead to the fourth decade of the technology revolution in higher education, campus and IT leaders must focus on the enabling infrastructure that will allow (a) students of all ages and backgrounds to realize their educational aspirations; (b) faculty across all disciplines and sectors to realize their instructional aspirations and scholarly goals; and (c) institutions across all sectors to do a better job of exploiting the power and potential of technology resources to enhance instruction, operations and management, and support services.

Accordingly, as we look beyond bits and bytes, beyond hardware and software, beyond network speeds and feeds, and beyond the ebb and flow of IT budgets in good times and difficult times, the priorities for information technology in higher education for the next decade are clear:

- User Support. Colleges and universities across all sectors must commit to major improvements in user training and support, including support for faculty who want to do more with IT resources in their instructional activities.

- Assessment. Opinion and epiphany cannot be allowed to dominate the conversations about institutional IT policy and planning. If colleges and universities are going to invest in technology to support instruction, they also need to assess the impact of these continuing efforts and investments in teaching, learning, and educational outcomes.

- Productivity. Much as higher education has struggled with the conversation about quality, so too will academe continue to struggle with a candid discussion about productivity. It is now time for academic leaders, including higher education's IT leadership, to have frank, candid, and public conversations about productivity: What are the appropriate metrics? What are reasonable expectations? What might technology contribute? And what are the limits of information technology in the discussion about productivity?

- Online Education. The large numbers of students enrolled in online courses and the growing number of institutions that offer online programs speak to both student interest and institutional aspirations and opportunities. The data on the educational outcomes of (non-MOOC) online courses and programs remains mixed. To do better, institutions must commit to significant and sustained efforts to evaluate their online efforts, documenting what works and what needs to improve. Concurrently, we need a new candor about the true costs of developing online programs, which includes full cost accounting for the people and the institutional resources required to support online programs and online students.

- Recognition and Reward. We must move to an expansive definition of scholarship in order to value the efforts of faculty who commit to making technology and experiments with IT resources part of their instructional portfolios. It is time for the deans, department chairs, and senior faculty who populate review and promotion committees to stand up for and stand with their colleagues who are innovating with technology.

- Data as a Resource. Higher education institutions must stop using data as a weapon against students, faculty, and programs. Rather, they should commit to using data as a resource that provides information and insight about the need for change and the path toward that change.

- The Value of Information Technology. In general, IT leaders have not done a good job of communicating the value of information technology to campus audiences. Moreover, institutional leaders must do a better job of conveying the value and impact of higher education's investments in information technology to off-campus audiences: board members, project sponsors, patrons, alumni, and government officials.

Higher education has invested significant time and money in information technology over the past three decades. And admittedly, academe has experienced some significant benefits in the areas of content and services. However, our reach continues to exceed our grasp, our implementations continue to fall short of our aspirations, and the corporate and consumer experience continues to cast a shadow over campus efforts. A renewed commitment to an enabling infrastructure, as part of our ongoing investment in IT resources, will help to ensure that the more things change, the less they will stay the same.

Notes

- Harry McCracken, "Exclusive: Watch Steve Jobs' First Demonstration of the Mac for the Public," Time, January 25, 2014.

- Steven W. Gilbert and Kenneth C. Green, "The New Computing in Higher Education," Change, May/June 1986, p. 33. See also Kenneth C. Green, "The New Computing Revisited," EDUCAUSE Review 38, no. 1 (January/February 2003).

- Frederick James Smith, "The Evolution of the Motion Picture: VI—Looking into the Future with Thomas A. Edison," New York Dramatic Mirror, July 9, 1913, p. 24. See "Books Will Soon Be Obsolete in the Schools," Quote Investigator, February 15, 2012.

- Patrick Suppes, "The Uses of Computers in Education," Scientific American 215, no. 3 (September 1966).

- George Bonham, "Television: The Unfulfilled Promise," Change 4, no. 1 (February 1972), 11.

- Kenneth C. Green, with Scott Jaschik and Doug Lederman, The 2012 Inside Higher Ed Survey of College and University Presidents; Kenneth C. Green, with Scott Jaschik and Doug Lederman, The 2011–12 Inside Higher Ed Survey of College & University Chief Academic Officers; Kenneth C. Green, Campus Computing, 2012 (Encino, CA: The Campus Computing Project, 2012).

- The top Campus Computing Survey priorities align with the number 1 and number 2 IT issues in the annual EDUCAUSE list for 2014: (1) Hiring and retaining qualified staff, and updating the knowledge and skills of existing technology staff; (2) Optimizing the use of technology in teaching and learning in collaboration with academic leadership, including understanding the appropriate level of technology to use. The EDUCAUSE list of top IT issues is developed by a panel of experts and then voted on by the EDUCAUSE community. See Susan Grajek and the 2014–2015 EDUCAUSE IT Issues Panel, "Top 10 IT Issues, 2015: Inflection Point," EDUCAUSE Review 50, no. 1 (January/February 2015).

- Alan S. Blinder, "The Mystery of Declining Productivity Growth," Wall Street Journal, May 14, 2015.

- Paul Krugman, "The Big Meh," New York Times, May 25, 2015.

- Kenneth C. Green, Campus Computing, 2014 (Encino, CA: The Campus Computing Project, 2014).

- I. Elaine Allen and Jeff Seaman, Changing Course: Ten Years of Tracking Online Education in the United States (Boston: Babson Survey Research Group, 2013). See also Ashley A. Smith, "The Increasingly Digital Community College," Inside Higher Ed, April 21, 2015.

- Justin Reich, "MOOC Completion and Retention in the Context of Student Intent," EDUCAUSE Review, December 8, 2014. See also Daphne Koller, Andrew Ng, Chuong Do, and Zhenghao Chen, "Retention and Intention in Massive Open Online Courses: In Depth," EDUCAUSE Review, June 3, 2013.

- "What We Know about Online Course Outcomes," CCRC, Teachers College, Columbia University, April 2013.

- Wayne Atchley, Gary Wingenbach, and Cindy Akers, "Comparison of Course Completion and Student Performance through Online and Traditional Courses," International Review of Research in Open and Distributed Learning 14, no. 4 (September 2013).

- Green, Campus Computing, 2014.

- A Test of Leadership: Charting the Future of U.S. Higher Education, A Report of the Commission Appointed by Secretary of Education Margaret Spellings (Washington, DC: U.S. Dept. of Education, 2006), 4.

- Kenneth C. Green, "Bring Data: A New Role for Information Technology after the Spellings Commission," EDUCAUSE Review 41, no. 6 (November/December 2006).

- Kenneth C. Green, David L. Smallen, Karen L. Leach, and Brian L. Hawkins, "Data: Roads Traveled, Lessons Learned," EDUCAUSE Review 40, no. 2 (March/April 2005); Leah Lang, "Benchmarking to Inform Planning: The EDUCAUSE Core Data Service," EDUCAUSE Review 50, no. 3 (May/June 2015).

Kenneth C. Green, the first recipient of the EDUCAUSE Leadership Award for Public Policy and Practice (2002), is the founding director of The Campus Computing Project. Launched in 1990 and celebrating its 25th year in 2015, Campus Computing is the largest continuing study of the role of e-learning and IT planning and policy issues in American higher education. Green's Digital Tweed blog is published by Inside Higher Ed.

© 2015 Kenneth C. Green. The text of this article is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

EDUCAUSE Review, vol. 50, no. 5 (September/October 2015)